Abstract

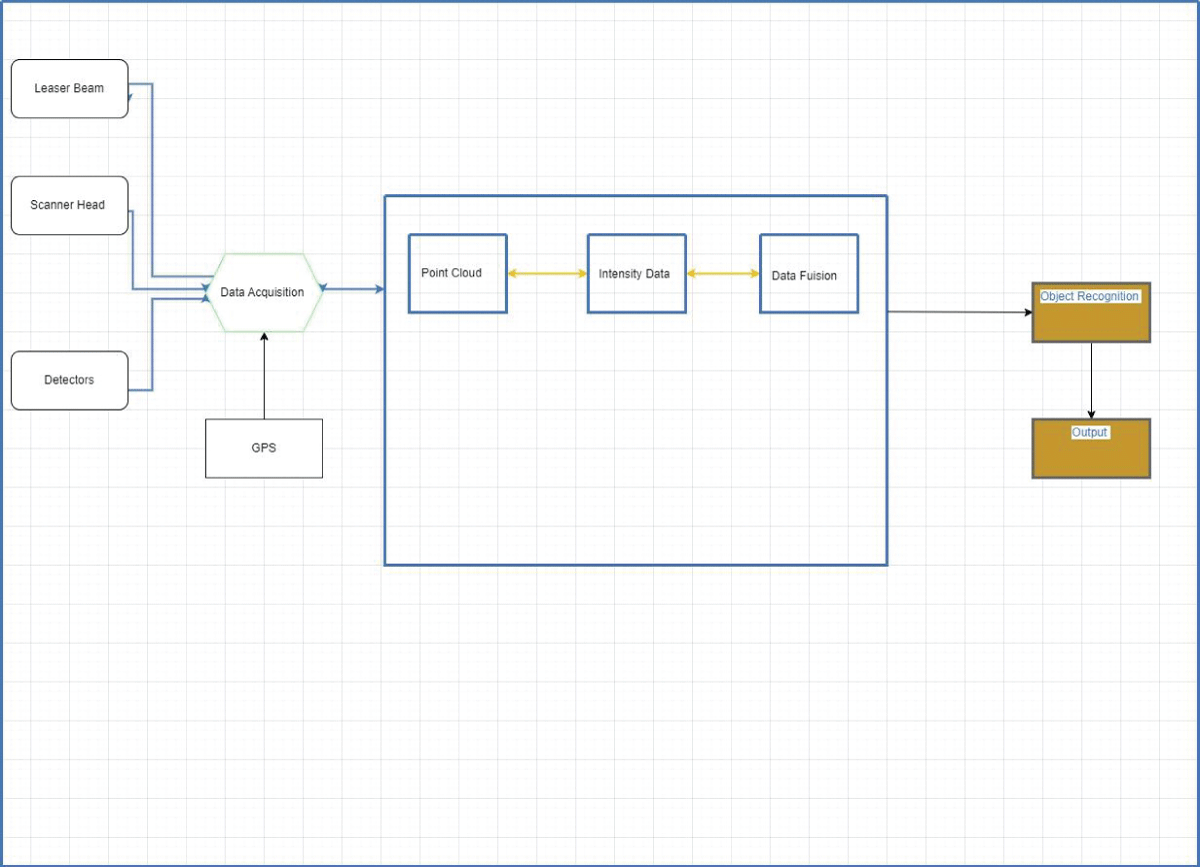

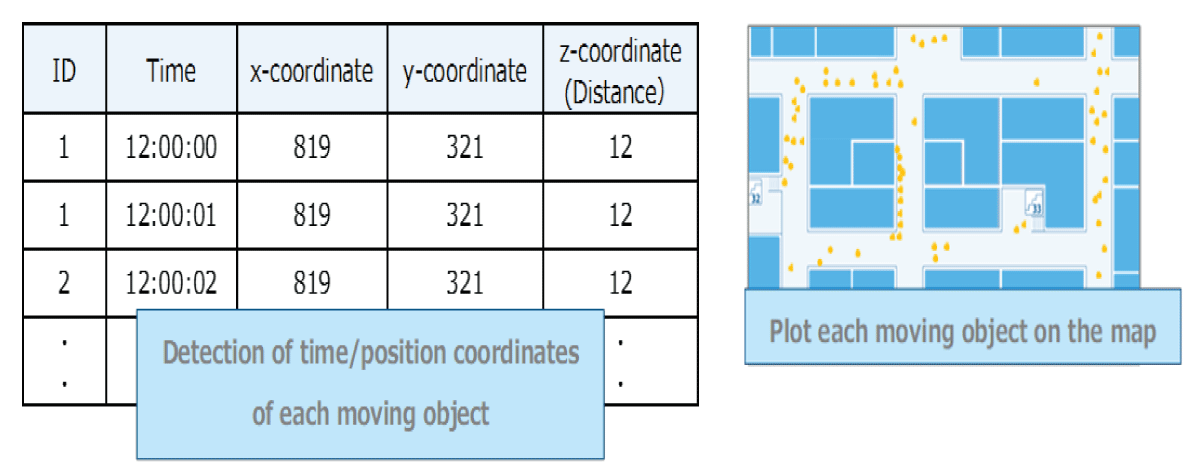

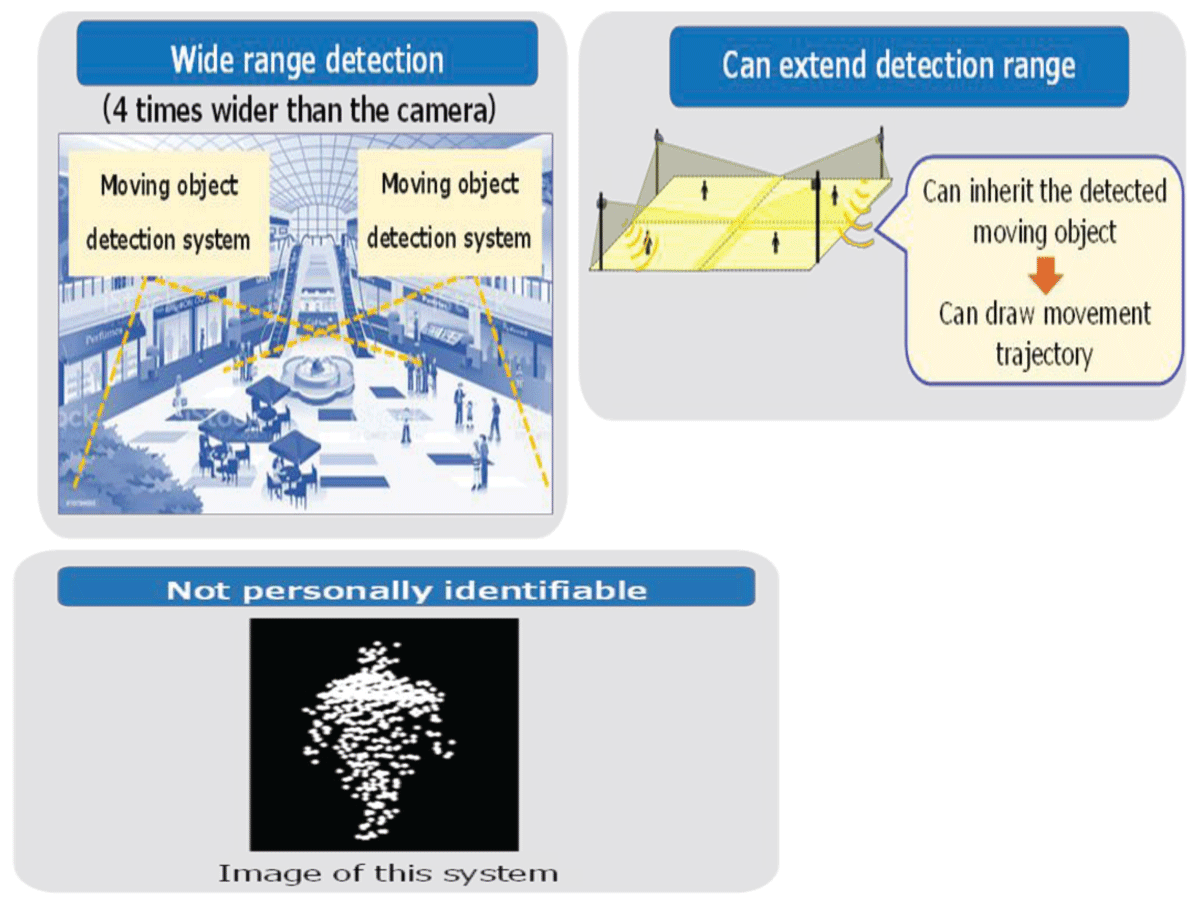

The “System for Detecting Moving Objects Using 3D LiDAR Technology” presents a method for identifying and tracking moving objects within a monitored environment. The system uses 3D LiDAR (Light Detection and Ranging), which measures distances with laser pulses, to build a detailed three-dimensional map (point cloud) of its surroundings. By continuously analyzing successive scans as the scene changes, it detects the objects that move within its designated area and tracks their motion. A distinguishing feature of the system is its multi-sensor approach, which fuses LiDAR data with inputs from other sensor modalities. This fusion improves detection accuracy and makes the system more adaptable, so that it performs robustly under difficult conditions such as low visibility or erratic motion patterns. The approach therefore improves the precision and reliability of moving-object detection, with applications that include autonomous vehicles, surveillance, and robotics.
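The abstract does not say how moving objects are separated from the static scene. One common baseline is voxel-occupancy change detection between consecutive scans, sketched below for illustration only. It is not the system's actual method. The sketch assumes both scans are already in the same coordinate frame, for example from a stationary sensor or after ego-motion compensation. The function names and the 0.5 m voxel size are hypothetical choices, and multi-sensor fusion is not modeled.

```python
import math
from typing import Iterable, List, Set, Tuple

Point = Tuple[float, float, float]
Voxel = Tuple[int, int, int]


def voxelize(points: Iterable[Point], voxel_size: float) -> Set[Voxel]:
    """Return the set of integer voxel indices occupied by at least one point."""
    return {
        (math.floor(x / voxel_size), math.floor(y / voxel_size), math.floor(z / voxel_size))
        for x, y, z in points
    }


def newly_occupied(prev_scan: Iterable[Point], curr_scan: Iterable[Point],
                   voxel_size: float = 0.5) -> Set[Voxel]:
    """Voxels occupied in the current scan but empty in the previous one.

    Assumption: both scans share one coordinate frame (static sensor or
    ego-motion already compensated); otherwise static structure would also
    appear to move.
    """
    return voxelize(curr_scan, voxel_size) - voxelize(prev_scan, voxel_size)


def cluster_voxels(voxels: Set[Voxel]) -> List[Set[Voxel]]:
    """Group 26-connected voxels into candidate moving objects via flood fill."""
    remaining = set(voxels)
    clusters: List[Set[Voxel]] = []
    while remaining:
        seed = remaining.pop()
        cluster, stack = {seed}, [seed]
        while stack:
            vx, vy, vz = stack.pop()
            # Visit all 26 neighbors (and the voxel itself, already removed).
            for dx in (-1, 0, 1):
                for dy in (-1, 0, 1):
                    for dz in (-1, 0, 1):
                        neighbor = (vx + dx, vy + dy, vz + dz)
                        if neighbor in remaining:
                            remaining.remove(neighbor)
                            cluster.add(neighbor)
                            stack.append(neighbor)
        clusters.append(cluster)
    return clusters


def centroid(cluster: Set[Voxel], voxel_size: float) -> Point:
    """Mean of the voxel centers in a cluster, in the scan's units (e.g. meters)."""
    n = len(cluster)

    def axis_mean(axis: int) -> float:
        return sum((v[axis] + 0.5) * voxel_size for v in cluster) / n

    return (axis_mean(0), axis_mean(1), axis_mean(2))


if __name__ == "__main__":
    prev = [(0.1, 0.1, 0.1), (5.2, 0.1, 0.1)]                   # static point + object
    curr = [(0.1, 0.1, 0.1), (6.2, 0.1, 0.1), (6.7, 0.1, 0.1)]  # object has moved
    moving = newly_occupied(prev, curr, voxel_size=0.5)
    objects = cluster_voxels(moving)
    print([centroid(c, 0.5) for c in objects])  # [(6.5, 0.25, 0.25)]
```

In this sketch, only voxels that become occupied are flagged, so each cluster marks where an object is now rather than where it was. Tracking over time would additionally require associating cluster centroids across frames, for example by nearest-neighbor matching.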