System for Detecting Moving Objects Using 3D LiDAR Technology

Robotics | Bioengineering | Sensors | Signal Processing

Received 02 Apr 2024 | Accepted 13 Apr 2024 | Published online 15 Apr 2024

ISSN: 2995-8067


The “System for Detecting Moving Objects Using 3D LiDAR Technology” introduces a method for precisely identifying and tracking dynamic entities within a given environment. Using 3D LiDAR (Light Detection and Ranging), the system scans its surroundings with laser beams and constructs detailed three-dimensional maps of them. By continuously analyzing the incoming data, it detects and monitors the movement of objects within its designated area. A distinguishing feature of the system is its multi-sensor approach, which combines LiDAR data with inputs from other sensor modalities. This fusion improves accuracy and makes the system more adaptable, supporting robust performance in challenging conditions such as low visibility or erratic movement patterns. The approach improves the precision and reliability of moving object detection, making it useful for a diverse range of applications, including autonomous vehicles, surveillance, and robotics.

In recent years, demand for accurate and reliable moving object detection systems has grown across industries including autonomous vehicles, surveillance, and robotics. Traditional approaches often struggle to identify and track objects accurately in dynamic and complex environments. To address these limitations, this paper presents a “System for Detecting Moving Objects Using 3D LiDAR Technology” []. By integrating 3D LiDAR with a multi-sensor fusion approach, the system offers high precision and adaptability and represents a significant advance in moving object detection. Three-dimensional Light Detection and Ranging (3D LiDAR) is a sensing technology [] that uses laser light for accurate distance measurement and spatial mapping. Often referred to as optical radar or laser radar, 3D LiDAR captures detailed, real-time information about the environment in three dimensions, and its precision and versatility have led to widespread use across many industries []. As urban environments become increasingly complex and dynamic, robust and reliable moving object detection becomes essential. Traditional surveillance methods rely heavily on 2D imaging and often fail to capture the spatial and temporal aspects of moving objects accurately. Integrating 3D LiDAR into detection systems offers a promising solution by providing a more complete understanding of the environment.

The research objective is to design and implement a system that leverages the capabilities of 3D LiDAR for real-time detection of moving objects []. By using the high-resolution point cloud data generated by the LiDAR sensor, the proposed system aims to overcome the limitations of traditional approaches and to improve accuracy and reliability in identifying and tracking dynamic entities within a given space. This introduction outlines the motivation for the research, emphasizing the need for advanced detection systems [] in evolving urban landscapes. The following sections review prior work on object detection, including real-world conditions and environmental factors; explain the theoretical foundations of 3D LiDAR and how it can improve detection; describe the methodology used to develop the system; and present experimental results with an analysis of the system’s performance. Through this investigation, we aim to contribute to the body of knowledge on intelligent surveillance and to advance the capabilities [] of moving object detection systems based on 3D LiDAR.

LiDAR uses laser light for remote sensing, measuring distances and generating detailed three-dimensional representations of the environment []. For moving object detection, it improves situational awareness as well as the safety and efficiency of applications such as autonomous vehicles, surveillance systems, and robotics []. The related work for this system reviews and analyzes existing research, technologies, and methodologies for moving object detection with 3D LiDAR. The key aspects are as follows.

LiDAR Technology in Object Detection: Previous studies and systems that have used LiDAR for object detection, the strengths and limitations of LiDAR compared [] with other sensor technologies, and advances in LiDAR hardware and signal processing algorithms.

Moving Object Detection Algorithms: Algorithms developed for detecting moving objects in 3D point cloud data obtained [] from LiDAR sensors, ranging from traditional methods such as clustering and segmentation to more advanced techniques such as deep learning-based approaches.

Integration with Sensor Fusion: Research on combining 3D LiDAR data with data from other sensors [] such as cameras or radar. Sensor fusion is crucial for improving the accuracy and reliability of moving object detection, especially in challenging environmental conditions [].
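Sensor fusion can take many forms, and the paper does not specify the one it uses. As a minimal sketch of one standard way to combine two sensors' estimates of the same quantity (for example, the range to one object from LiDAR and from radar), the code below applies inverse-variance weighting. It assumes independent, unbiased measurements with known variances; the `Estimate` type, the `fuse` helper, and the example variances are illustrative, not taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    value: float     # measured quantity, e.g. range to an object in metres
    variance: float  # measurement variance in m^2 (assumed known and > 0)


def fuse(estimates: list[Estimate]) -> Estimate:
    """Inverse-variance weighted average of independent estimates of one quantity.

    More precise sensors (smaller variance) get proportionally more weight, and the
    fused variance is never larger than the smallest input variance.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / e.variance for e in estimates]
    total = sum(weights)
    value = sum(w * e.value for w, e in zip(weights, estimates)) / total
    return Estimate(value=value, variance=1.0 / total)


# Illustrative numbers: LiDAR sigma = 0.2 m, radar sigma = 0.6 m.
fused = fuse([Estimate(10.0, 0.04), Estimate(10.6, 0.36)])
```

With these example variances the LiDAR reading carries nine times the weight of the radar reading, so the fused range (about 10.06 m) stays close to the LiDAR value while its variance drops below either input.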

3D LiDAR (Light Detection and Ranging) systems have become integral tools in mapping and sensing applications, ranging from autonomous vehicles and robotics to environmental monitoring []. The core design of a 3D LiDAR system combines hardware and software components, which together determine its ability to capture and interpret detailed spatial data.

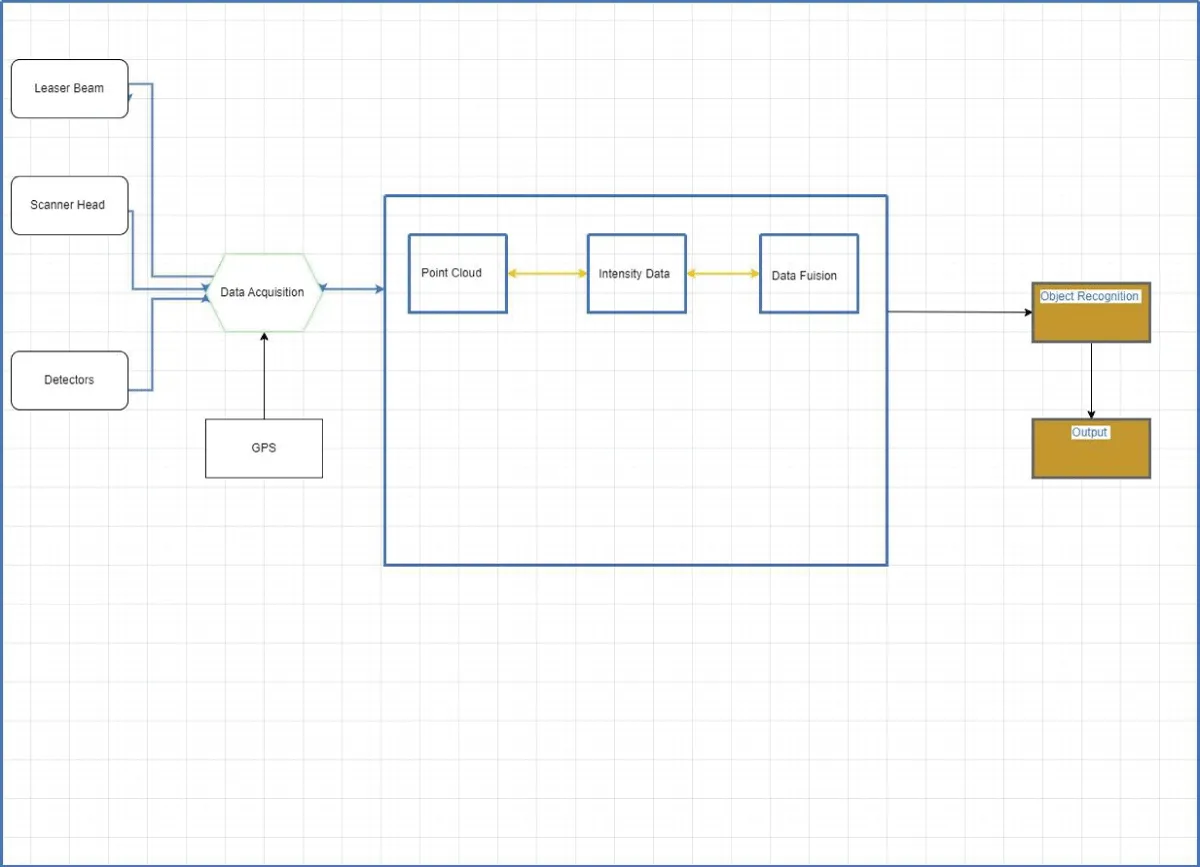

For data acquisition, the system requires the components listed below. The LiDAR sensor scans its surroundings by emitting laser beams in different directions []. A rotating mirror or prism provides a 360-degree view. The time of flight of each laser beam is recorded, and together these measurements form a point cloud.

1. Laser scanner: The core component, which emits laser beams and measures the time it takes for them to return after hitting an object. This time is used to calculate the distance to the object.
2. Motor/scanner head: Many LiDAR systems use a rotating mirror or prism to redirect the laser beams [] in different directions, allowing the system to scan a full 360-degree area.
3. Detectors/receivers: These receive the laser beams reflected off objects and measure the time of flight to calculate distances accurately.
4. GPS (Global Positioning System): Provides the geographic location of the LiDAR sensor [].
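The acquisition chain above can be sketched as two conversions, under assumptions the paper does not state. First, each return's time of flight and beam angles become a point in the sensor frame. Second, the sensor position from GPS (assumed already converted to local east/north/up metres) and a heading (assumed to come from another source, such as an IMU) place that point in a world frame. The axis conventions and the function names `return_to_point` and `sensor_to_world` are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def return_to_point(tof_s: float, azimuth_deg: float, elevation_deg: float) -> tuple[float, float, float]:
    """Convert one laser return into (x, y, z) in the sensor frame.

    Assumed frame: x forward, y left, z up; azimuth measured from x towards y,
    elevation measured upward from the horizontal plane.
    """
    r = C * tof_s / 2.0  # the pulse travels out and back, so halve the path length
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    horizontal = r * math.cos(el)
    return (horizontal * math.cos(az), horizontal * math.sin(az), r * math.sin(el))


def sensor_to_world(point, sensor_pos, heading_rad):
    """Place a sensor-frame point in a local world frame (east, north, up).

    Assumes sensor_pos is (east, north, up) in metres derived from the GPS fix,
    heading is measured counter-clockwise from east, and the sensor is mounted
    level (no roll or pitch), so only a rotation about the vertical axis is needed.
    """
    x, y, z = point
    c, s = math.cos(heading_rad), math.sin(heading_rad)
    east, north, up = sensor_pos
    return (east + c * x - s * y, north + s * x + c * y, up + z)


# A return after 2 * 10 m / c seconds, straight ahead and level, is 10 m in front.
p = return_to_point(2 * 10.0 / C, 0.0, 0.0)
w = sensor_to_world(p, (100.0, 200.0, 1.5), math.radians(90.0))  # sensor facing north
```

A full scan is just this conversion applied to every (time of flight, azimuth, elevation) triple that the rotating head produces.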

Data processing:
Point Cloud Generation: The raw data is processed to generate a point cloud, which is a collection of 3D points in space []. Each point corresponds to a surface or object detected by the LiDAR.
Intensity Data: Some LiDAR systems also measure the intensity of the returned laser beams [], which provides information about the reflectivity of surfaces.
Data Fusion (optional): LiDAR data can be fused with data from other sensors [], such as cameras or radar, to improve accuracy and object recognition.
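For LiDAR and camera fusion, a common first step is to project each LiDAR point into the camera image so that points can be matched with what the camera sees. The sketch below uses a distortion-free pinhole model and assumes a known calibration; the extrinsic rotation and translation, the intrinsics `fx`, `fy`, `cx`, `cy`, and the example matrix `R_EXAMPLE` are hypothetical values, not parameters from the paper.

```python
def project_to_image(point_lidar, rotation, translation, fx, fy, cx, cy):
    """Project one LiDAR point into camera pixel coordinates (u, v) with a pinhole model.

    rotation (3x3 nested lists) and translation (3 values) are assumed extrinsic
    calibration mapping LiDAR coordinates into the camera frame (x right, y down,
    z forward); fx, fy, cx, cy are assumed intrinsics in pixels. Lens distortion is
    ignored. Returns None for points at or behind the camera plane.
    """
    # Camera-frame coordinates: p_cam = R * p_lidar + t
    xc, yc, zc = (
        sum(rotation[row][k] * point_lidar[k] for k in range(3)) + translation[row]
        for row in range(3)
    )
    if zc <= 0:
        return None  # not in front of the camera, so it cannot appear in the image
    return (fx * xc / zc + cx, fy * yc / zc + cy)


# Example extrinsics for a camera co-located with a LiDAR whose frame is
# x forward, y left, z up: camera x = -lidar y, camera y = -lidar z, camera z = lidar x.
R_EXAMPLE = [[0, -1, 0], [0, 0, -1], [1, 0, 0]]
T_EXAMPLE = [0.0, 0.0, 0.0]

uv = project_to_image((10.0, -1.0, 0.0), R_EXAMPLE, T_EXAMPLE, 500.0, 500.0, 320.0, 240.0)
```

A point that lands inside a camera detection box can then inherit that box's label, which is one simple way to combine the two sensors.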

Object recognition and filtering: Algorithms are applied to identify and classify objects within the point cloud []. In this work, YOLOv5 was used to detect objects. This step may include identifying obstacles, terrain, and other relevant features.
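The sketch below does not reproduce the paper's YOLOv5 step. Instead, it illustrates the traditional filtering and clustering route mentioned in the related work. It assumes flat, level ground and a known sensor mounting height: ground returns are removed first, and the remaining points are grouped into object candidates by Euclidean clustering (points within a chosen radius of each other join the same cluster). The function names and the default tolerance are hypothetical.

```python
import math
from collections import deque


def remove_ground(points, sensor_height_m, tolerance_m=0.15):
    """Drop returns that lie near an assumed flat, level ground plane.

    Points are (x, y, z) in the sensor frame with z up, so flat ground sits at
    z = -sensor_height_m. Uneven terrain would need plane fitting (e.g. RANSAC) instead.
    """
    return [p for p in points if abs(p[2] + sensor_height_m) > tolerance_m]


def euclidean_clusters(points, radius, min_size=1):
    """Group points whose chains of neighbours lie within `radius` metres.

    Returns a list of clusters, each a sorted list of indices into `points`;
    clusters smaller than `min_size` are discarded as noise.
    """
    def cell(p):
        return tuple(math.floor(c / radius) for c in p)

    # Voxel grid with cell size `radius`: any neighbour within `radius` lies in
    # one of the 27 cells surrounding a point's own cell.
    grid = {}
    for i, p in enumerate(points):
        grid.setdefault(cell(p), []).append(i)

    visited = [False] * len(points)
    clusters = []
    for seed in range(len(points)):
        if visited[seed]:
            continue
        visited[seed] = True
        queue, members = deque([seed]), []
        while queue:  # breadth-first flood fill from the seed point
            i = queue.popleft()
            members.append(i)
            cx, cy, cz = cell(points[i])
            for dx in (-1, 0, 1):
                for dy in (-1, 0, 1):
                    for dz in (-1, 0, 1):
                        for j in grid.get((cx + dx, cy + dy, cz + dz), []):
                            if not visited[j] and math.dist(points[i], points[j]) <= radius:
                                visited[j] = True
                                queue.append(j)
        if len(members) >= min_size:
            clusters.append(sorted(members))
    return clusters
```

Each resulting cluster is a candidate object whose centroid can be handed to a tracker; the radius trades off merging nearby objects against splitting sparse ones.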

Output: The final output may include a 3D representation of the environment annotated with the identified objects and their characteristics. Output formats may include point cloud data, sensor fusion data [], or higher-level information, depending on the application. In autonomous vehicles or robotics, the processed LiDAR data [] is often fed into control systems that make real-time decisions based on the environment. The processed data can also be visualized with 3D rendering techniques, allowing users to interact with and interpret the information. Overall, a 3D LiDAR system acquires raw data, processes and filters it, and generates meaningful output for applications such as navigation, mapping, or object recognition []. Figure 1 shows the overall structure of the system.
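As one possible shape for this annotated output, the sketch below serializes a processed frame to JSON. The record layout, the field names (`track_id`, `label`, `position_m`, `speed_mps`), and the helper `frame_to_json` are hypothetical, not a format defined by the paper. The record carries positions and speeds but no image data, in line with the privacy-conscious design the paper describes.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class TrackedObject:
    """Hypothetical per-object record produced by the processing stage."""
    track_id: int
    label: str                              # class assigned by the recognition step
    position_m: tuple[float, float, float]  # x, y, z in metres (sensor or world frame)
    speed_mps: float                        # estimated speed in metres per second


def frame_to_json(timestamp_s: float, objects: list[TrackedObject]) -> str:
    """Serialize one processed frame for a downstream consumer such as a controller or viewer."""
    return json.dumps({"timestamp_s": timestamp_s, "objects": [asdict(o) for o in objects]})


print(frame_to_json(12.5, [TrackedObject(1, "car", (10.0, 2.0, 0.0), 4.2)]))
```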

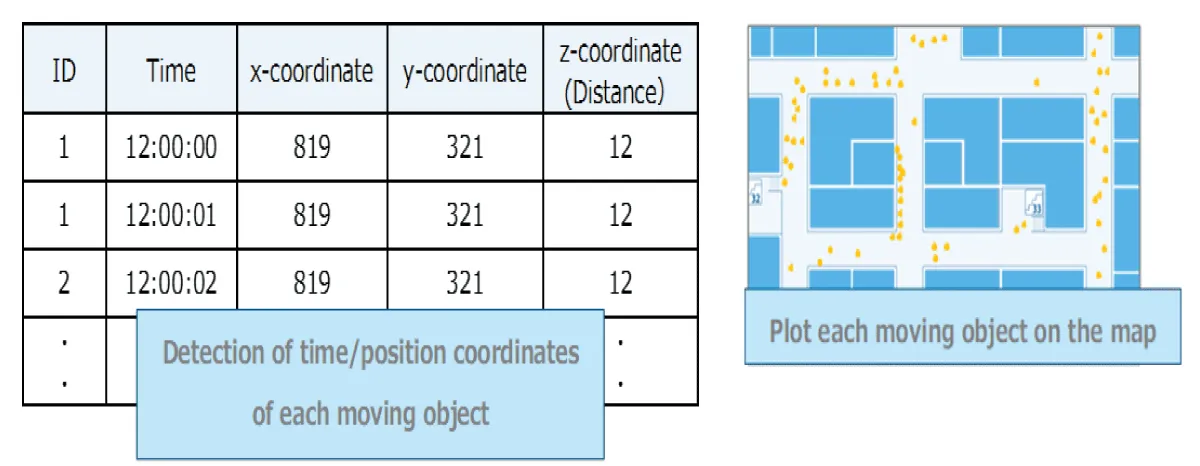

Principle of Operation: Unlike traditional radar systems, which use radio waves [], 3D LiDAR uses laser beams to measure distances and create detailed maps. The system emits laser pulses and measures the time each pulse takes to travel to an object and back, from which it determines the distance with high accuracy. By scanning the surrounding area with these laser pulses [], 3D LiDAR builds a detailed and dynamic [] representation of the environment, as shown in Figure 2.
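The time-of-flight relation behind this principle is written out below as a worked example. The pulse travels to the object and back, so the one-way distance is half the round-trip path. The 200 ns and 1 ns values are illustrative, not measurements from the paper.

```latex
\begin{aligned}
d &= \frac{c\,\Delta t}{2}, \qquad c \approx 2.998 \times 10^{8}\ \mathrm{m/s} \\
\Delta t = 200\ \mathrm{ns} \;&\Rightarrow\; d \approx \frac{(2.998 \times 10^{8})(200 \times 10^{-9})}{2}\ \mathrm{m} \approx 29.98\ \mathrm{m} \\
\delta d &= \frac{c\,\delta t}{2}, \qquad \delta t = 1\ \mathrm{ns} \;\Rightarrow\; \delta d \approx 0.15\ \mathrm{m}
\end{aligned}
```

The last line shows why timing precision matters: every nanosecond of timing uncertainty corresponds to roughly 15 cm of range uncertainty.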

1. Distance sensing: 3D LiDAR accurately measures distances to objects within its field of view, making it well suited to applications [] where precision is essential.
2. Real-time mapping: The technology creates detailed three-dimensional maps of the surroundings in real time. This mapping is crucial for applications such as autonomous navigation, environmental monitoring, and industrial automation [].
3. Object tracking: 3D LiDAR systems can track moving objects by recording their coordinates over time. This is particularly valuable where the dynamic behavior of objects needs to be monitored and analyzed.
4. Spatial imaging: Because it captures spatial information [], 3D LiDAR allows the environment to be visualized in three dimensions [], providing a comprehensive view of the surroundings.
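Object tracking requires deciding which detection in the current frame corresponds to which object in the previous frame. The paper does not specify its association method. The sketch below uses greedy nearest-neighbor matching as a simple stand-in for optimal assignment (such as the Hungarian method listed in the references), and estimates speed as the distance traveled divided by the frame interval. The function name and the `max_jump_m` gate are illustrative assumptions.

```python
import math


def associate_and_measure(prev, curr, dt_s, max_jump_m):
    """Match objects between two frames and estimate each matched object's speed.

    prev, curr: dicts of {object_id: (x, y, z)} centroids in metres.
    dt_s: time between the two frames in seconds.
    Greedy nearest-neighbour matching: candidate pairs are taken in order of
    increasing distance, each object is used at most once, and pairs further apart
    than max_jump_m are rejected. Returns {curr_id: (prev_id, speed_mps)}.
    """
    pairs = sorted(
        ((math.dist(p, c), pid, cid) for pid, p in prev.items() for cid, c in curr.items()),
        key=lambda pair: pair[0],
    )
    used_prev, matches = set(), {}
    for dist_m, pid, cid in pairs:
        if dist_m > max_jump_m:
            break  # pairs are sorted, so every remaining pair is even further apart
        if pid in used_prev or cid in matches:
            continue
        used_prev.add(pid)
        matches[cid] = (pid, dist_m / dt_s)
    return matches
```

Unmatched current detections can be treated as new tracks, and unmatched previous objects as tracks that have left the scene or been occluded. Greedy matching can make suboptimal choices when objects pass close to each other, which is where an optimal assignment method would help.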

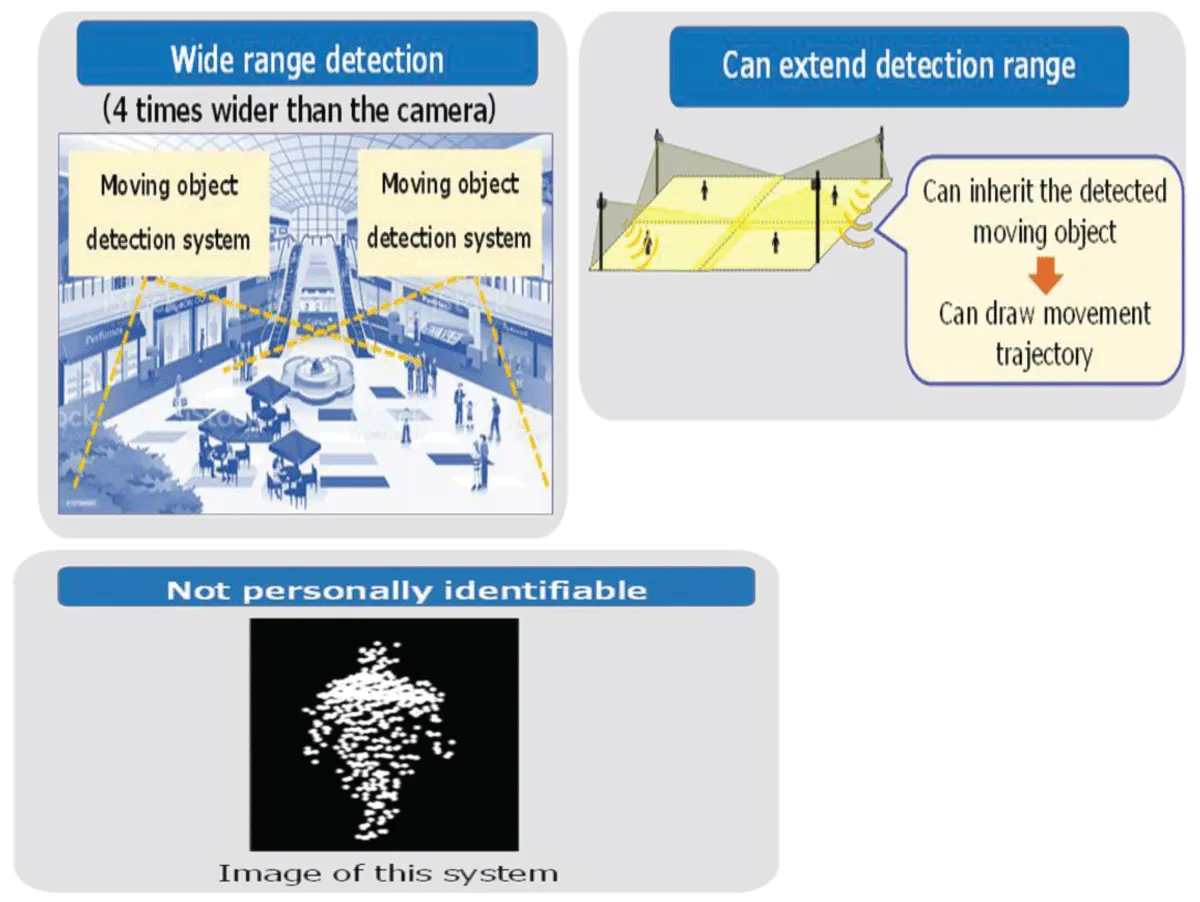

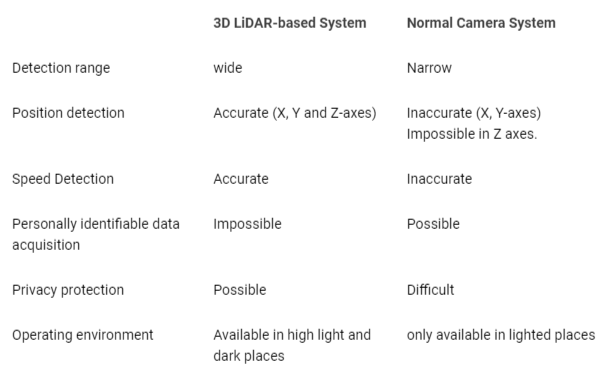

The described 3D LiDAR system offers a wide detection range, approximately four times that of a standard camera (Table 1), and its coverage can be scaled by installing multiple units (Figure 3). With precise location data (±20 cm accuracy) and real-time updates every 10 ms to 1 s, it tracks moving objects and measures their speed. Importantly, it preserves privacy because it does not identify individuals, making it suitable for applications such as surveillance, traffic management, industrial automation, robotics, and environmental monitoring.
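The ±20 cm position accuracy and the update interval together bound how precisely speed can be measured. The paper does not state how speed is computed, so the worked bound below rests on two assumptions: speed is taken as the finite difference between two consecutive position fixes taken Δt apart, and each fix is off by at most e = 0.2 m. The third line follows from the triangle inequality.

```latex
\begin{aligned}
\hat{p}_k &= p_k + \varepsilon_k, \qquad \lVert \varepsilon_k \rVert \le e = 0.2\ \mathrm{m} \\
\hat{v} &= \frac{\lVert \hat{p}_k - \hat{p}_{k-1} \rVert}{\Delta t}, \qquad v = \frac{\lVert p_k - p_{k-1} \rVert}{\Delta t} \\
\lvert \hat{v} - v \rvert &\le \frac{\lVert \varepsilon_k - \varepsilon_{k-1} \rVert}{\Delta t} \le \frac{2e}{\Delta t} \\
\Delta t = 1\ \mathrm{s} &\;\Rightarrow\; \lvert \hat{v} - v \rvert \le 0.4\ \mathrm{m/s} \\
\Delta t = 10\ \mathrm{ms} &\;\Rightarrow\; \lvert \hat{v} - v \rvert \le 40\ \mathrm{m/s}
\end{aligned}
```

Under these assumptions, a speed read from two fixes 10 ms apart can be dominated by position error. Measuring over a longer baseline T tightens the bound to 2e/T, which suggests that speed estimates at the fastest update rate would need smoothing over several updates.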

Comparison with conventional products (Table 1)

In conclusion, the “System for Detecting Moving Objects Using 3D LiDAR Technology” provides an effective and versatile solution for capturing and analyzing dynamic elements within a given environment. Its key strengths are an extended detection range, scalable coverage through the integration of multiple 3D LiDARs, and high-precision location data with a position error within ±20 cm. Real-time data updates, every 10 milliseconds to 1 second, make the system responsive to changes in the environment and to the movement of objects. The system not only detects moving objects but also measures their speed, which adds an important dimension to the information it provides. This makes it particularly valuable where understanding the dynamics of moving objects is essential, as in autonomous vehicles, robotics, and surveillance. Importantly, the system’s privacy-conscious design, which does not acquire personally identifiable data such as images, aligns with ethical considerations and privacy regulations. This design choice makes the system suitable for deployment in a variety of scenarios without compromising individual privacy.

In summary, the “System for Detecting Moving Objects Using 3D LiDAR Technology” offers a comprehensive and reliable solution for real-time object detection, tracking, and data analysis. Its capabilities make it well suited to diverse applications, providing valuable insights into dynamic elements within a given environment while prioritizing precision and privacy.

The authors express their sincere gratitude to the Department of Electronics and Communication Engineering at Hajee Mohammad Danesh Science and Technology University (HSTU), Bangladesh, for its unwavering encouragement and support of this research.

Feng Z, Jing L, Yin P, Tian Y, Li B. Advancing self-supervised monocular depth learning with sparse LiDAR. In Conference on Robot Learning. 2022; 685-694. https://doi.org/10.48550/arXiv.2109.09628

Sinan H, Fabio R, Tim K, Andreas R, Werner H. Raindrops on the windshield: Performance assessment of camera-based object detection. In IEEE International Conference on Vehicular Electronics and Safety. 2019; 1–7. https://doi.org/10.1109/ICVES.2019.8906344

Ponn T, Kröger T, Diermeyer F. Unraveling the complexities: Addressing challenging scenarios in camera-based object detection for automated vehicles. Sensors. 2020; 20(13): 3699. https://doi.org/10.3390/s20133699

Fu XB, Yue SL, Pan DY. Scoring on the court: A novel approach to camera-based basketball scoring detection using convolutional neural networks. International Journal of Automation and Computing. 2021; 18(2):266–276. https://doi.org/10.1007/s11633-020-1259-7

Lee J, Hwang KI. Adaptive frame control for real-time object detection: YOLO's evolution. Multimedia Tools and Applications. 2022; 81(25): 36375–36396. https://doi.org/10.1007/s11042-021-11480-0

Meyer GP, Laddha A, Kee E, Vallespi-Gonzalez C, Wellington CK. LaserNet: Efficient probabilistic 3D object detection for autonomous driving. In IEEE Conference on Computer Vision and Pattern Recognition. 2019; 12677–12686. https://doi.org/10.1109/CVPR.2019.01296

Shi S, Wang X, Li H. PointRCNN: 3D object proposal generation and detection from point cloud. In IEEE Conference on Computer Vision and Pattern Recognition. 2019; 770–779. https://doi.org/10.1109/CVPR.2019.00086

Ye M, Xu S, Cao T. HVNet: Hybrid voxel network for LiDAR-based 3D object detection. In IEEE Conference on Computer Vision and Pattern Recognition. 2020; 1631–1640. https://doi.org/10.1109/CVPR42600.2020.00170

Ye Y, Chen H, Zhang C, Hao X, Zhang Z. SARPNet: Shape attention regional proposal network for LiDAR-based 3D object detection. Neurocomputing. 2020; 379: 53–63. https://doi.org/10.1016/j.neucom.2019.09.086

Fan L, Xiong X, Wang F, Wang N, Zhang Z. RangeDet: In defense of range view for LiDAR-based 3D object detection. In IEEE International Conference on Computer Vision. 2021; 2918–2927. https://doi.org/10.1109/ICCV48922.2021.00291

Hu H, Cai Q, Wang D, Lin J, Sun M, Krahenbuhl P, Darrell T, Yu F. Joint monocular 3D vehicle detection and tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 2019; 5390–5399.

Kuhn H. The Hungarian method for the assignment problem. Naval Research Logistics (NRL). 2005; 52(1):7–21.

Geiger A, Lenz P, Urtasun R. Are we ready for autonomous driving? The KITTI vision benchmark suite. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2012; 3354–3361.

Wang S, Sun Y, Liu C, Liu M. PointTrackNet: An end-to-end network for 3D object detection and tracking from point clouds. IEEE Robotics and Automation Letters. 2020; 5: 3206–3212.

Bernardin K, Stiefelhagen R. Evaluating multiple object tracking performance: The CLEAR MOT metrics. EURASIP Journal on Image and Video Processing. 2008; 246309.

Luiten J, Ošep A, Dendorfer P, Torr P, Geiger A, Leal-Taixé L, Leibe B. HOTA: A higher order metric for evaluating multi-object tracking. International Journal of Computer Vision. 2021; 129(2): 548–578. https://doi.org/10.1007/s11263-020-01375-2

Wang S, Cai P, Wang L, Liu M. DITNET: End-to-end 3D object detection and track ID assignment in spatio-temporal world. IEEE Robotics and Automation Letters. 2021; 6:3397–3404.

Luiten J, Fischer T, Leibe B. Track to reconstruct and reconstruct to track. IEEE Robotics and Automation Letters. 2020; 5:1803–1810.

Khader M, Cherian S. An Introduction to Automotive LIDAR. Texas Instruments. 2020; https://www.ti.com/lit/wp/slyy150a/slyy150a.pdf

Tian Z, Chu X, Wang X, Wei X, Shen C. Fully Convolutional One-Stage 3D Object Detection on LiDAR Range Images. 2022. arXiv preprint arXiv:2205.13764.

Chen X, Ma H, Wan J, Li B, Xia T. Multi-view 3D object detection network for autonomous driving. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2017; 1907–1915.

Ku J, Mozifian M, Lee J, Harakeh A, Waslander SL. Joint 3D proposal generation and object detection from view aggregation. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). 2018; 1–8.

Zhou Y, Tuzel O. VoxelNet: End-to-end learning for point cloud-based 3D object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2018; 4490–4499.

Qi CR, Liu W, Wu C, Su H, Guibas LJ. Frustum PointNets for 3D object detection from RGB-D data. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2018; 918–927.

Cao P, Chen H, Zhang Y, Wang G. Multi-view frustum PointNet for object detection in autonomous driving. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP). 2019; 3896–3899.

Qi CR, Su H, Mo K, Guibas LJ. PointNet: Deep learning on point sets for 3D classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2017; 652–660.

Qi CR, Yi L, Su H, Guibas LJ. PointNet++: Deep hierarchical feature learning on point sets in a metric space. arXiv preprint arXiv:1706.02413. 2017.

Shi S, Wang X, Li H. PointRCNN: 3D object proposal generation and detection from point cloud. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2019; 770–779.

Sun X, Wang S, Wang M, Cheng SS, Liu M. An advanced LiDAR point cloud sequence coding scheme for autonomous driving. In Proceedings of the 28th ACM International Conference on Multimedia. 2020; 2793–2801.

Sun X, Wang M, Du J, Sun Y, Cheng SS. A task-driven scene-aware LiDAR point cloud coding framework for autonomous vehicles. IEEE Transactions on Industrial Informatics. 2022; 1–11. https://doi.org/10.1109/TII.2022.3168235

How to cite this article: Rana MD, Nisha OR, Hossain MD, Hossain MD, Hasan MD, Moon MAM. System for Detecting Moving Objects Using 3D Li-DAR Technology. IgMin Res. 15 Apr, 2024; 2(4): 213-217. IgMin ID: igmin167; DOI:10.61927/igmin167; Available at: igmin.link/p167


1Department of Electronics and Communication Engineering, Hajee Mohammad Danesh Science and Technology University, Dinajpur-5200, Bangladesh

2Department of Pharmacy, World University of Bangladesh, Bangladesh

Address Correspondence:

Md Milon Rana, Department of Electronics and Communication Engineering, Hajee Mohammad Danesh Science and Technology University, Dinajpur-5200, Bangladesh


Copyright: © 2024 Rana MM, et al. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Figure 1: Basic Structure of 3D LiDAR System....

Figure 2: Detection of time in coordinates of each moving ob...

Figure 3: Detection Range and Output....

Table 1: Comparison with other products...
