A Survey of Motion Data Processing and Classification Techniques Based on Wearable Sensors

Machine Learning, Bioengineering, Biomedical Engineering

Received 13 Nov 2023 | Accepted 01 Dec 2023 | Published online 04 Dec 2023

ISSN: 2995-8067

The rapid development of wearable technology provides new opportunities for motion data processing and classification. Wearable sensors can monitor the physiological and motion signals of the human body in real time, providing rich data sources for health monitoring, sports analysis, and human-computer interaction. This paper reviews motion data processing and classification techniques based on wearable sensors, covering feature extraction, classification, and future developments and challenges. First, it introduces the research background of wearable sensors and highlights their applications in health monitoring, sports analysis, and human-computer interaction. It then describes the main stages of motion data processing and classification: feature extraction, model construction, and activity recognition. For feature extraction, the paper focuses on shallow and deep feature extraction; for classification, it examines traditional machine learning models and deep learning models. Finally, it identifies current challenges and promising directions for future research. By discussing in depth the feature extraction and classification techniques for sensor time series data from wearable devices, this paper aims to promote the application and development of wearable technology in health monitoring, sports analysis, and human-computer interaction.

Index Terms: Activity recognition, Wearable sensor, Feature extraction, Classification

The rapid development of technology and the continuous progress of society have made wearable technology one of the most closely watched fields in recent years [-]. Wearable devices such as smart watches, smart glasses, health trackers, and smart clothing have gradually become part of daily life, not only offering many conveniences but also giving us more opportunities to obtain data about ourselves and our surrounding environment. The sensors in these devices collect various types of data, including images, sounds, motion, physiological parameters, and other time series [,]. Among them, time series data are particularly challenging because they contain a large amount of temporal information, and extracting useful information from them requires complex processing and analysis techniques.

The rise of wearable sensor technology has benefited greatly from continuous advances in sensors and from improvements in computing power and storage capacity. Modern wearable sensors can capture multiple types of data, including acceleration, angular velocity (from gyroscopes), heart rate, skin conductance, and temperature. These sensors monitor physiological and environmental parameters of the human body non-invasively, providing strong support for applications such as health monitoring, sports analysis, and quality-of-life improvement []. However, the data they generate are typically multi-channel, high-dimensional time series of large volume and complexity. Classification techniques for wearable sensor time series have therefore attracted extensive attention in both academia and industry, and processing and analyzing these data efficiently enough to achieve accurate Human Activity Recognition (HAR) remains a challenge.

In the context of wearable sensor data, HAR is an important application area that associates the data captured by sensors with specific activities or states. Depending on the data type, HAR can generally be divided into two categories: HAR based on video data [-] and HAR based on wearable sensor data [,].

Wearable sensor-based action classification uses embedded sensors, typically accelerometers, gyroscopes, heart rate sensors, and temperature sensors, to monitor human movement and physiological parameters. The outputs of these sensors form multi-channel, high-dimensional data that can be analyzed to classify different human activities []. Camera-based action classification, by contrast, uses ordinary or depth cameras to capture visual information about human movement, and typically relies on computer vision and image processing techniques to monitor and classify human postures and activities in real time [,].

Compared with HAR based on wearable sensor data, HAR based on video data faces several challenges: the potential risk of user privacy leakage, the fixed position of the camera, the ease with which users can move out of the camera's field of view, and the large storage requirements of video data [,]. As a result, video-based HAR places high demands on data storage and computing resources. Sensor-based HAR effectively avoids these issues. In addition, rapid advances in mobile hardware and Internet of Things technology have made wearable devices more compact, portable, and easy to operate. The sensors may be purpose-built wearable devices designed for specific tasks, or sensors built into common devices such as smartphones, smart bracelets, game consoles, and smart clothing. Sensor data volumes are much smaller than video streams, so even though sensors generate data continuously, network transmission and computing costs remain relatively low, which makes real-time computation easy to achieve. Increasingly powerful smart terminal devices and the widespread use of remote cloud computing platforms for big data have greatly increased the computing speed of wearable sensor applications, enabling them to respond to user needs more quickly, and in recent years edge computing has made data processing more efficient still. More importantly, sensor data better protect user privacy and are less intrusive with respect to users' personal characteristics.

Therefore, with the rapid development of hardware, mobile computing, and the Artificial Intelligence of Things (AIoT), HAR applications built on wearable sensor data have become increasingly common and are widely used in daily life, in fields such as health monitoring, entertainment, industry, the military, and social interaction [,,].

Health and medical care [,]. Wearable technology holds great potential in health monitoring. Smartwatches, health trackers, and medical devices allow people to monitor vital signs such as heart rate, blood pressure, blood oxygen saturation, and body temperature in real time. This real-time monitoring can give early warning of medical problems and help patients better manage chronic diseases such as diabetes and hypertension. Wearable technology is also used during surgical procedures to track patients' vital signs, improving surgical safety.

Fitness and sports training [-]. Wearable technology has become a valuable assistant for fitness and sports enthusiasts. Smartwatches and sports trackers can monitor exercise data such as step counts, calorie consumption, and sleep quality. Trainers can use this information to adjust training plans and improve their effectiveness. In professional sports, athletes use wearable technology to refine their skills and monitor their physical condition.

Entertainment and gaming [,]. Wearable technology offers new possibilities for forms of entertainment such as Virtual Reality (VR) and Augmented Reality (AR). VR headsets and AR glasses have been widely used in gaming, virtual travel, and training, providing an immersive experience that places players and users directly in the virtual world.

Production and industrial fields [,]. In the manufacturing and industrial fields, wearable technology helps to improve efficiency and safety. For example, smart glasses can provide real-time information and guidance to workers, helping them complete complex tasks. Sensors embedded in workwear can monitor the posture and movements of employees to prevent injuries.

In conclusion, wearable technology has profoundly changed the way we live, bringing greater convenience, safety, and interactivity. Its application across multiple fields continues to drive technological innovation, and further developments and improvements can be expected. The continued progress of these technologies will open up new possibilities in health, entertainment, productivity, and social interaction.

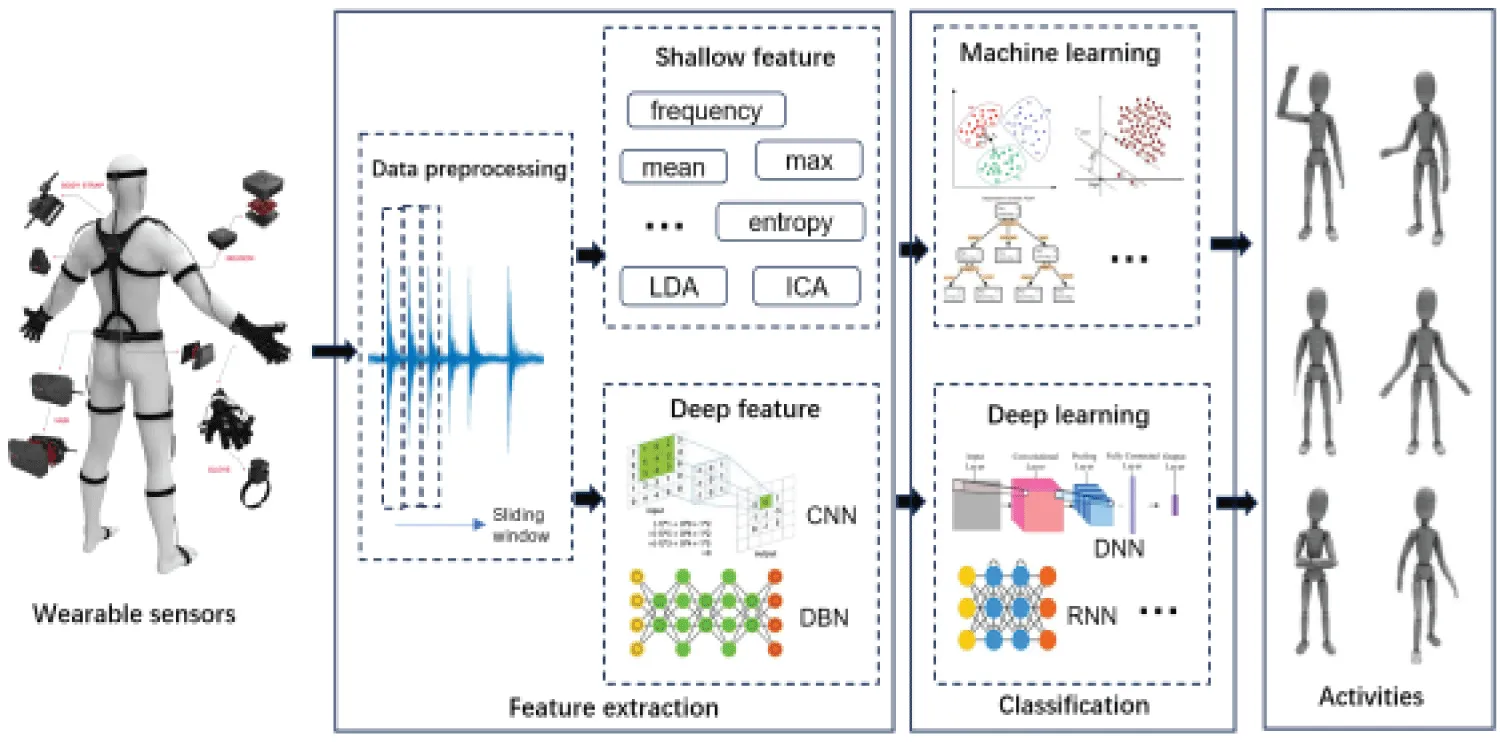

The classification of wearable sensor time series data generally follows the basic HAR framework shown in Figure 1, which consists of three core modules: feature extraction, model construction, and activity recognition. Feature extraction learns feature vectors of human activities and actions from wearable sensor time series; models are then trained and constructed according to the requirements of the application; and finally the trained models identify the user's action category in practice. On this basis, this paper reviews the development of classification techniques for wearable sensor time series data, focusing on feature extraction, time series classification models, and current challenges, to help researchers understand the current state of the field and its future trends.

The goal of feature extraction is to find features that distinguish different activities, so that sensor data classification techniques can use them to discriminate between actions. This is currently a research hotspot, since a good feature representation has a significant impact on a model's classification accuracy. The features are mainly constructed from data collected by triaxial sensors such as accelerometers, gyroscopes, and magnetometers.

As shown in Figure 1, the raw data must be preprocessed before the feature space is constructed, in order to reduce noise and to slice the time series according to a window size. First, the raw data are filtered to remove noise points and normalized over a given period. Next, the data are segmented with a fixed window size, and the sensor time series within each window is treated as one sample for activity recognition []. Longer-lasting actions typically use larger time windows, and shorter actions smaller ones. The size of the sliding window is usually determined by the activity categories and the data sampling frequency: a window that is too large may contain several short activities, while one that is too small may capture only part of an action [,].
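The preprocessing and segmentation steps above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the pipeline of any cited study: the moving-average filter, per-channel z-score normalization, the 50 Hz sampling rate, and the 128-sample window with 50% overlap are illustrative choices, and all function names are hypothetical.

```python
import numpy as np

def moving_average(x: np.ndarray, k: int = 5) -> np.ndarray:
    """Smooth each channel of x (shape: samples x channels) with a length-k moving average."""
    kernel = np.ones(k) / k
    # mode="same" keeps the original length; the edges are averaged with implicit zeros.
    return np.stack([np.convolve(x[:, c], kernel, mode="same") for c in range(x.shape[1])], axis=1)

def zscore(x: np.ndarray) -> np.ndarray:
    """Normalize each channel to zero mean and unit variance."""
    std = x.std(axis=0)
    std[std == 0] = 1.0  # avoid division by zero on constant channels
    return (x - x.mean(axis=0)) / std

def sliding_windows(x: np.ndarray, window: int, step: int) -> np.ndarray:
    """Cut x (samples x channels) into windows of shape (n_windows, window, channels).
    A step smaller than the window gives overlapping windows."""
    starts = range(0, x.shape[0] - window + 1, step)
    return np.stack([x[s:s + window] for s in starts])

# Example: 500 samples of simulated 3-axis data (10 s at an assumed 50 Hz),
# cut into 128-sample (2.56 s) windows with 50% overlap (step of 64 samples).
rng = np.random.default_rng(0)
raw = rng.normal(size=(500, 3))
windows = sliding_windows(zscore(moving_average(raw)), window=128, step=64)
print(windows.shape)  # (6, 128, 3)
```

Each of the six windows then becomes one sample unit for feature extraction; the trailing 52 samples that do not fill a complete window are discarded in this sketch.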

According to how they are generated, features in the HAR framework can be divided into two categories: traditional (shallow) features and deep features [,]. Shallow features are extracted manually using statistical methods, whereas deep features are extracted automatically, for example by neural networks.

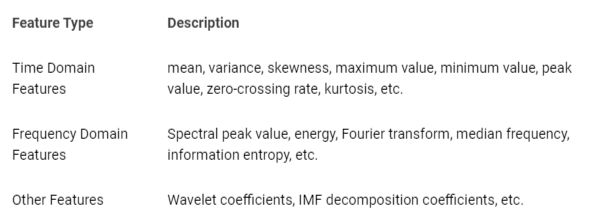

Traditional features rely mainly on empirical knowledge: statistically meaningful feature vectors are extracted by hand from the segmented data, including time-domain features [], frequency-domain features [], and other features such as wavelet features [,]. Time-domain features mainly include the mean, variance, standard deviation, skewness, kurtosis, quartiles, mean absolute deviation, and the correlation coefficients between axes. Frequency-domain features mainly include the peaks and energy of the discrete Fourier transform spectrum and discrete cosine transform coefficients. Wavelet features are the approximation and detail coefficients obtained from the discrete wavelet transform. These features are summarized in Table 1.
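As a rough illustration of these hand-crafted features, the NumPy sketch below computes most of the time-domain features listed above, the DFT peak and energy, and the energies of a one-level Haar wavelet decomposition for a single window. It is a minimal sketch under assumptions: the energy definition (sum of squared DFT magnitudes excluding the DC bin, divided by the window length), the Haar wavelet, and the use of coefficient energies as wavelet features are illustrative choices; DCT coefficients are omitted for brevity, and the function names are hypothetical.

```python
import numpy as np

def time_features(w: np.ndarray) -> np.ndarray:
    """Per-channel time-domain features of one window w with shape (samples, channels)."""
    mean = w.mean(axis=0)
    std = w.std(axis=0)
    centered = w - mean
    safe = np.where(std > 0, std, 1.0)                   # avoid division by zero on flat channels
    skew = (centered ** 3).mean(axis=0) / safe ** 3
    kurt = (centered ** 4).mean(axis=0) / safe ** 4      # plain (non-excess) kurtosis
    q1, q3 = np.percentile(w, [25, 75], axis=0)          # lower and upper quartiles
    mad = np.abs(centered).mean(axis=0)                  # mean absolute deviation
    corr = np.atleast_2d(np.corrcoef(w, rowvar=False))   # channel-by-channel correlations
    axis_corr = corr[np.triu_indices(w.shape[1], k=1)]   # one value per pair of axes
    return np.concatenate([mean, std ** 2, std, skew, kurt, q1, q3, mad, axis_corr])

def freq_features(w: np.ndarray) -> np.ndarray:
    """Peak magnitude and energy of the DFT spectrum per channel, excluding the DC bin."""
    mag = np.abs(np.fft.rfft(w, axis=0))[1:]
    peak = mag.max(axis=0)
    energy = (mag ** 2).sum(axis=0) / w.shape[0]
    return np.concatenate([peak, energy])

def haar_features(w: np.ndarray) -> np.ndarray:
    """Energy of the one-level Haar DWT approximation and detail coefficients per channel."""
    n = w.shape[0] - w.shape[0] % 2                      # use an even number of samples
    even, odd = w[0:n:2], w[1:n:2]
    approx = (even + odd) / np.sqrt(2)                   # low-pass (approximation) coefficients
    detail = (even - odd) / np.sqrt(2)                   # high-pass (detail) coefficients
    return np.concatenate([(approx ** 2).sum(axis=0), (detail ** 2).sum(axis=0)])

def window_features(w: np.ndarray) -> np.ndarray:
    """Concatenate all hand-crafted features of one window into a single vector."""
    return np.concatenate([time_features(w), freq_features(w), haar_features(w)])

# Example: a single 128-sample window of simulated 3-axis data.
window = np.random.default_rng(1).normal(size=(128, 3))
print(window_features(window).shape)  # (39,)
```

For a 3-axis window this yields 27 time-domain values (8 per axis plus 3 inter-axis correlations), 6 frequency-domain values, and 6 wavelet values. The resulting vectors are typically high-dimensional once several sensors are combined, which motivates the dimensionality reduction discussed next.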

Manual feature extraction is interpretable, easy to understand, and computationally cheap. For complex actions, however, the expressiveness of traditional features is limited, which degrades the model's recognition performance. Research on wearable sensor-based human action recognition has explored various feature extraction methods and classification techniques to address this problem. Representative studies include the following. Altun, et al. [] used time-domain features such as the mean, variance, skewness, kurtosis, and the correlation coefficients between axes, and compared the performance of different classification techniques. Zhang, et al. [] extracted time-domain features such as the mean, quartiles, and skewness, and used sparse representation algorithms for action classification. Bao, et al. [] used time-domain features such as the mean and the correlation coefficients between axes, together with the peaks and energy of the Discrete Fourier Transform spectrum, to identify 20 daily actions. In another study, Altun, et al. [] used frequency-domain features based on the Discrete Cosine Transform for action classification. Khan, et al. [] used autoregressive models and extracted features such as the autoregressive coefficients and the body's inclination angle for action recognition.

After feature extraction, researchers usually face high-dimensional feature vectors, which can lead to high computational complexity and make the data hard to interpret and visualize. Feature dimensionality reduction is therefore needed to eliminate redundant information. Common dimensionality reduction methods in sensor-based human action recognition include Principal Component Analysis (PCA) [-], Linear Discriminant Analysis (LDA) [,], and Independent Component Analysis (ICA) [,].

The core idea of PCA [-] is to project high-dimensional data into a low-dimensional space while preserving as much information (variance) as possible. This is done by computing the covariance matrix of the data and its eigenvalues and eigenvectors, and then sorting the eigenvectors by the magnitude of their eigenvalues. By setting a threshold on the cumulative variance contribution rate, only a few eigenvectors need to be kept to map the high-dimensional data into the low-dimensional space. For example, Altun, et al. [] used PCA to reduce the dimensionality from 1170 to 30.
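The PCA procedure just described (covariance matrix, eigendecomposition, sorting by eigenvalue, cumulative-variance threshold) can be sketched in a few lines of NumPy. This is a minimal sketch, assuming a 95% cumulative variance threshold and hypothetical function names; library implementations usually compute the same projection through a singular value decomposition instead.

```python
import numpy as np

def pca_fit(X: np.ndarray, threshold: float = 0.95):
    """Return (mean, components) keeping the fewest eigenvectors whose cumulative
    explained-variance ratio reaches `threshold`. X has shape (n_samples, n_features)."""
    mean = X.mean(axis=0)
    cov = np.cov(X - mean, rowvar=False)             # feature covariance matrix
    eigvals, eigvecs = np.linalg.eigh(cov)           # eigh returns ascending eigenvalues
    order = np.argsort(eigvals)[::-1]                # sort by decreasing eigenvalue
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    ratio = np.cumsum(eigvals) / eigvals.sum()       # cumulative variance contribution rate
    k = int(np.searchsorted(ratio, threshold) + 1)   # first index reaching the threshold, plus one
    return mean, eigvecs[:, :k]

def pca_transform(X: np.ndarray, mean: np.ndarray, components: np.ndarray) -> np.ndarray:
    """Project X onto the retained eigenvectors."""
    return (X - mean) @ components

# Example: 5-D feature vectors whose variance lives almost entirely in 2 directions.
rng = np.random.default_rng(0)
latent = rng.normal(size=(500, 2)) * [5.0, 3.0]
X = np.hstack([latent, 0.01 * rng.normal(size=(500, 3))])
mean, comps = pca_fit(X, threshold=0.95)
print(pca_transform(X, mean, comps).shape)  # (500, 2)
```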

The goal of LDA [,] is to find the projection directions that bring samples of the same category as close together as possible while pushing samples of different categories as far apart as possible. Unlike PCA, which is unsupervised, LDA is a supervised method that uses class labels. For example, Khan, et al. [] used LDA for feature dimensionality reduction and thereby improved action recognition accuracy.
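A compact NumPy sketch of multi-class Fisher LDA is shown below: it builds the within-class scatter matrix (how spread out each class is) and the between-class scatter matrix (how far the class means lie from the overall mean), and keeps the eigenvectors of pinv(Sw) Sb with the largest eigenvalues. The use of a pseudo-inverse to cope with a singular Sw and the function name are assumptions made for illustration.

```python
import numpy as np

def lda_fit(X: np.ndarray, y: np.ndarray, n_components: int) -> np.ndarray:
    """Return a (n_features, n_components) projection that maximizes between-class scatter
    relative to within-class scatter. At most (n_classes - 1) directions are informative."""
    mean_all = X.mean(axis=0)
    n_features = X.shape[1]
    Sw = np.zeros((n_features, n_features))   # within-class scatter
    Sb = np.zeros((n_features, n_features))   # between-class scatter
    for c in np.unique(y):
        Xc = X[y == c]
        mc = Xc.mean(axis=0)
        Sw += (Xc - mc).T @ (Xc - mc)
        diff = (mc - mean_all)[:, None]
        Sb += len(Xc) * (diff @ diff.T)
    # Generalized eigenproblem Sb v = lambda Sw v, solved here via pinv(Sw) @ Sb.
    eigvals, eigvecs = np.linalg.eig(np.linalg.pinv(Sw) @ Sb)
    order = np.argsort(eigvals.real)[::-1]
    return eigvecs[:, order[:n_components]].real

# Example: two classes that differ along axis 0, with large irrelevant variance along axis 1.
rng = np.random.default_rng(0)
X0 = rng.normal(size=(200, 2)) * [1.0, 10.0]
X1 = rng.normal(size=(200, 2)) * [1.0, 10.0] + [4.0, 0.0]
X = np.vstack([X0, X1])
y = np.array([0] * 200 + [1] * 200)
W = lda_fit(X, y, n_components=1)
Z = X @ W  # 1-D projection used as the reduced feature
```

In this example PCA would favor axis 1 because it has the larger variance, whereas LDA uses the labels and picks axis 0, the direction that actually separates the classes.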

ICA [,] is an analysis method based on the higher-order statistics of signals; it reduces dimensionality by finding projection directions along which the projected components are mutually independent. ICA is therefore suited to non-Gaussian data. For example, Mantyjarvi, et al. [] combined ICA with neural networks for action classification.
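The NumPy sketch below implements a textbook deflationary FastICA with the tanh nonlinearity, preceded by PCA whitening that keeps only `n_components` dimensions, which is one common way to use ICA for dimensionality reduction. FastICA is our choice of ICA algorithm for illustration; the cited studies may use a different variant, and the function name, parameters, and the synthetic sources are hypothetical.

```python
import numpy as np

def fast_ica(X: np.ndarray, n_components: int, max_iter: int = 200,
             tol: float = 1e-6, seed: int = 0) -> np.ndarray:
    """Deflationary FastICA (tanh nonlinearity).
    X: (n_samples, n_features). Returns (n_samples, n_components) estimated sources."""
    rng = np.random.default_rng(seed)
    Xc = (X - X.mean(axis=0)).T                      # (features, samples), centered
    d, E = np.linalg.eigh(np.cov(Xc))                # PCA step on the feature covariance
    top = np.argsort(d)[::-1][:n_components]         # keep the largest-variance directions
    K = (E[:, top] / np.sqrt(d[top])).T              # whitening matrix, shape (k, features)
    Z = K @ Xc                                       # whitened data with identity covariance
    W = np.zeros((n_components, n_components))
    for p in range(n_components):
        w = rng.normal(size=n_components)
        w /= np.linalg.norm(w)
        for _ in range(max_iter):
            g = np.tanh(w @ Z)
            # Fixed-point update: E[z g(w.z)] - E[g'(w.z)] w, with g' = 1 - tanh^2
            w_new = (Z * g).mean(axis=1) - (1 - g ** 2).mean() * w
            w_new -= W[:p].T @ (W[:p] @ w_new)       # decorrelate from components already found
            w_new /= np.linalg.norm(w_new)
            converged = abs(abs(w_new @ w) - 1) < tol  # direction unchanged up to sign
            w = w_new
            if converged:
                break
        W[p] = w
    return (W @ Z).T

# Example: two independent non-Gaussian sources mixed into three "sensor" channels.
t = np.linspace(0, 8, 2000)
S = np.c_[np.sin(2 * t), np.sign(np.sin(3 * t))]
A = np.array([[1.0, 0.5], [0.5, 1.0], [0.3, 0.8]])
X = S @ A.T
estimated = fast_ica(X, n_components=2)  # 3 channels reduced to 2 independent components
```

ICA recovers sources only up to order, sign, and scale, so any comparison with the true sources should use absolute correlations after matching components.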

These feature extraction and dimension reduction methods play a key role in human action recognition with wearable sensor technology, helping to improve the accuracy and efficiency of classification. Researchers choose appropriate methods according to specific application scenarios and data types to achieve the best recognition performance.

Deep features are more complex features extracted automatically from preprocessed data by neural networks or other deep learning techniques, reducing the need for manual intervention. Compared with traditional features, deep features are more robust and generalize better, and they cope effectively with large within-class differences and between-class similarities. Convolutional Neural Networks (CNNs) are among the most widely studied feature extractors in deep learning; they overcame the challenges of large parameter counts and difficult feature preservation in image processing. Without requiring domain-specific expertise, CNNs automatically extract high-level features and have achieved outstanding results in image recognition, speech recognition, and other areas. In recent years, CNNs have also made significant progress in HAR based on temporal sensor data. Unlike raw image data, sensor data are one-dimensional time series that CNNs cannot directly use to extract features of different activities, so either the data or the model must be adapted. Literature [] was the first to apply CNNs to sensor-based HAR, explaining the basis and advantages of doing so from the perspectives of local dependence and scale invariance. Local dependence means that samples close together in time are strongly correlated, just as adjacent pixels in an image are; CNNs can capture this local dependence in signals from different activity sources. Scale invariance refers to the ability of image data to retain their features when scaled. In time series data, the same category of action may occur at different time scales, and CNNs can capture features of the same action category without being disturbed by such temporal variations.

In classification models for sensor time series, CNNs are mainly used to extract features in one of two ways: data-driven and model-driven []. The data-driven approach treats each dimension of the sensor time series as an independent channel and extracts features with one-dimensional convolutional kernels. The model-driven approach converts the one-dimensional signals into two-dimensional signals according to certain rules and then extracts features with two-dimensional convolutional kernels.

Feature extraction based on one-dimensional convolution: One-dimensional convolutional kernels are applied to one-dimensional time series to extract time-dependent features. Although wearable devices use diverse types of sensors, the data from each sensor channel are one-dimensional time series; for example, each axis of an accelerometer forms one channel. In most one-dimensional convolution methods, the input matrix has the sliding window size as one dimension and the total number of sensor channels as the other, and a one-dimensional kernel convolves the data on each channel along the time axis. With a fixed sliding window, action features can thus be extracted automatically. The studies in this area differ mainly in their choice of sensors and the details of their CNN structures.
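The data-driven scheme described above, in which a (window size x channels) input is convolved along the time axis channel by channel, can be sketched in NumPy as follows. This is an untrained forward pass only, assuming random kernels, ReLU activation, and non-overlapping max pooling; real models learn the kernels by backpropagation in a deep learning framework, and, as in those frameworks, the "convolution" here is computed as a cross-correlation. All names are hypothetical.

```python
import numpy as np

def conv1d_per_channel(x: np.ndarray, kernels: np.ndarray, bias: np.ndarray) -> np.ndarray:
    """x: (window, channels); kernels: (n_filters, k); bias: (n_filters,).
    Each filter slides along the time axis of every channel separately (valid padding).
    Returns an array of shape (window - k + 1, channels, n_filters)."""
    k = kernels.shape[1]
    patches = np.lib.stride_tricks.sliding_window_view(x, k, axis=0)  # (T, channels, k)
    return np.einsum("tck,fk->tcf", patches, kernels) + bias

def max_pool_time(h: np.ndarray, size: int) -> np.ndarray:
    """Non-overlapping max pooling along the time axis (axis 0); leftover steps are dropped."""
    T = h.shape[0] // size
    return h[:T * size].reshape(T, size, *h.shape[1:]).max(axis=1)

def extract_features(x: np.ndarray, kernels: np.ndarray, bias: np.ndarray, pool: int = 4) -> np.ndarray:
    """Convolution -> ReLU -> pooling, flattened into the window's feature vector."""
    h = np.maximum(conv1d_per_channel(x, kernels, bias), 0.0)
    return max_pool_time(h, pool).reshape(-1)

# Example: one 128-sample window of 3-axis accelerometer data, 4 filters of length 5.
rng = np.random.default_rng(0)
x = rng.normal(size=(128, 3))
kernels = rng.normal(size=(4, 5))
features = extract_features(x, kernels, np.zeros(4))
print(features.shape)  # (372,): 31 pooled steps x 3 channels x 4 filters
```

Because each kernel only ever sees one channel, this sketch also illustrates the limitation noted below: no operation mixes information across axes or sensors.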

Literature [] treats the data from the three axes of a single three-axis accelerometer as three channels, convolves and pools each along the time axis, and finally combines the results into the window's feature vector; it also proposes a CNN convolution method with locally shared weights. Because this study uses only one pair of convolutional and pooling layers, the automatically extracted features are relatively shallow. Literature [] also feeds the raw three-axis data of a single accelerometer into the convolutional network but changes the size of the convolutional kernel to suit the characteristics of three-axis acceleration signals. Similarly, Literature [] uses three-axis acceleration data collected by a wrist-worn sensor to identify volleyball actions. Literature [] combines the three axes of an accelerometer and the three axes of a gyroscope into six channels, processes the input with one-dimensional convolutional kernels, and extracts deep features through multiple convolutional and pooling layers to improve recognition accuracy. Literature [] uses not only a three-axis accelerometer but also data from various other sensors, extending the three channels to many, and extracts features with two convolutional and pooling layers of one-dimensional kernels. Literature [] combines CNN and Long Short-Term Memory (LSTM) networks into a deep learning model: multiple layers of one-dimensional convolutional kernels extract features automatically without pooling layers, and LSTM layers then perform classification and recognition, achieving excellent results on commonly used datasets.

One-dimensional convolutional kernels are very good at mining temporal dependencies during feature extraction. However, they usually ignore the spatial dependencies between different types of sensors, or between different axes of the same sensor, which harms human action recognition performance.

Feature extraction based on two-dimensional convolution: Because of these limitations of one-dimensional kernels for sensor time series, model-driven methods reorganize the raw data into an image-like input matrix, called an action picture, which is then processed with two-dimensional convolutional kernels and the corresponding pooling operations to extract spatiotemporal dependencies. Different learning models are applied after feature extraction. Literature [] treats the raw multi-channel data as an image, convolves the entire image with two-dimensional kernels to produce a one-dimensional feature map, and then applies a one-dimensional kernel to that feature map. Literature [] arranges data from sensors of the same type at different positions by axis, separating them with zero matrices, so that all axial information is contained in a single action picture; on this input structure, two-dimensional kernels combine temporal and spatial dependencies. Building on the two-dimensional kernel of Literature [], Literature [] implements two convolutional neural network structures, with local weight sharing and global weight sharing, respectively. Literature [] converts the one-dimensional raw signals into spectra by Fourier transform, generating spectra for the three-axis accelerometer and three-axis gyroscope signals, and uses these spectra as the input to convolution. Similarly, Literature [] proposes an algorithm that converts one-dimensional signals into two-dimensional image signals in which each channel signal is adjacent to every other channel signal, even those of different types, so that dependencies between every pair of signals can be extracted.
Literature [] modifies this input-image construction algorithm and adjusts the arrangement order of the (x, y, z) axis data of different types of three-axis sensors at the same time step, so that the data of all sensor types are adjacent at each moment and the data of the same sensor type along different axes are also adjacent.
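The idea of an action picture can be sketched in NumPy: the channels of two three-axis sensors are stacked into a 2-D array (rows are channels, columns are time steps) and a 2-D kernel that spans adjacent rows slides over it, so each output mixes neighboring channels as well as neighboring time steps. The interleaved row order (acc_x, gyro_x, acc_y, gyro_y, acc_z, gyro_z) is a hypothetical choice made so that a two-row kernel sees two different sensors on the same axis; it is not the specific arrangement algorithm of any cited study, and the function names are illustrative.

```python
import numpy as np

def action_image(acc: np.ndarray, gyro: np.ndarray) -> np.ndarray:
    """Stack two 3-axis sensors (each of shape (window, 3)) into a (6, window) image
    whose rows are interleaved by axis: acc_x, gyro_x, acc_y, gyro_y, acc_z, gyro_z."""
    rows = []
    for axis in range(3):
        rows.append(acc[:, axis])
        rows.append(gyro[:, axis])
    return np.stack(rows)

def conv2d_valid(img: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Single-filter 2-D cross-correlation with valid padding.
    Output shape: (rows - kh + 1, cols - kw + 1)."""
    kh, kw = kernel.shape
    patches = np.lib.stride_tricks.sliding_window_view(img, (kh, kw))  # (H', W', kh, kw)
    return np.einsum("ijkl,kl->ij", patches, kernel)

# Example: a 128-sample window from an accelerometer and a gyroscope,
# filtered with a kernel spanning 2 adjacent channels and 5 time steps.
rng = np.random.default_rng(0)
img = action_image(rng.normal(size=(128, 3)), rng.normal(size=(128, 3)))
feature_map = np.maximum(conv2d_valid(img, rng.normal(size=(2, 5))), 0.0)  # ReLU
print(feature_map.shape)  # (5, 124)
```

Changing the row order changes which channel pairs a small kernel can relate, which is why the input arrangement matters so much for two-dimensional models.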

Compared with one-dimensional kernels, which consider only temporal dependencies, two-dimensional kernels offer more ways to exploit the spatial dependencies in sensor data. Human action recognition models based on two-dimensional kernels depend on how the input data are organized, yet existing research either arranges the channels of different sensors in a simple, plain order and applies two-dimensional convolution directly, or roughly groups data from the same position by axis. Although existing two-dimensional convolution methods outperform traditional HAR methods based on statistical features or one-dimensional kernels on evaluation metrics such as accuracy and precision, they do not make reasonable and full use of the characteristics of three-axis sensor data at different positions, nor do they fully exploit spatial dependencies.

Building classification models is another core research issue. The input of a model is the features extracted from the raw data, and the output is the activity label assigned to those features. Existing classification models for sensor time series can be divided into two categories according to their learning principle and feature type: traditional classification models and deep learning models.

The traditional classification techniques can be mainly divided into two categories: single-instance-based methods and sequence-based recognition methods.

Single-instance-based methods include the Least Squares Method (LSM) [], K-Nearest Neighbor (K-NN) [], Decision Tree (DT) [,], threshold-based classification [], Naive Bayes (NB) [,], Dynamic Time Warping (DTW) [,], Support Vector Machine (SVM) [,], and Artificial Neural Networks (ANN) [,]. These methods assign a label to each feature vector independently, without regard to the classification of previous actions.

LSM [] first computes the average reference vector of each category, then subtracts each average vector from the test vector and computes the norm of the difference, that is, the distance. The test vector is assigned the label of the category with the minimum distance. LSM is a relatively simple classifier: it only needs the average vectors, so it has low storage requirements and short execution time, and it requires no training beyond computing the class averages. It is therefore typically used in real-time action recognition systems.
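The procedure described for LSM amounts to a nearest-class-mean (minimum-distance) classifier, which can be sketched in NumPy as follows. The Euclidean norm and the class name are assumptions for illustration.

```python
import numpy as np

class MinimumDistanceClassifier:
    """Store one average vector per class, then label each test vector
    with the class whose average is closest in Euclidean norm."""

    def fit(self, X: np.ndarray, y: np.ndarray) -> "MinimumDistanceClassifier":
        self.classes_ = np.unique(y)
        self.means_ = np.stack([X[y == c].mean(axis=0) for c in self.classes_])
        return self

    def predict(self, X: np.ndarray) -> np.ndarray:
        # Distance from every test vector to every class mean: shape (n_test, n_classes).
        dist = np.linalg.norm(X[:, None, :] - self.means_[None, :, :], axis=2)
        return self.classes_[dist.argmin(axis=1)]

# Example with two toy activity classes in a 2-D feature space.
X = np.array([[0.0, 0.0], [0.2, 0.0], [5.0, 5.0], [5.2, 5.0]])
y = np.array([0, 0, 1, 1])
clf = MinimumDistanceClassifier().fit(X, y)
print(clf.predict(np.array([[0.5, 0.5], [4.0, 4.5]])))  # [0 1]
```

Only one vector per class is kept, which is what gives this approach its small memory footprint compared with K-NN.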

K-NN [] is a classic pattern classification algorithm that has been widely used in human action recognition research. It computes the distance between the test vector and every training sample vector, selects the K nearest samples, and assigns the test vector the label that occurs most often among them. Although K-NN has no explicit training phase, it must store all training vectors and compute the distance from the test vector to every one of them, so its computational cost grows with the amount of data.
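A brute-force K-NN classifier following the description above might look like this in NumPy. It is a minimal sketch: Euclidean distance, majority voting, and the tie-breaking rule (smallest label wins) are assumptions, and the function name is hypothetical.

```python
import numpy as np

def knn_predict(X_train: np.ndarray, y_train: np.ndarray, X_test: np.ndarray, k: int = 3) -> np.ndarray:
    """Label each test vector by majority vote among its k nearest training vectors."""
    # Full (n_test, n_train) distance matrix: the cost that grows with the amount of data.
    dist = np.linalg.norm(X_test[:, None, :] - X_train[None, :, :], axis=2)
    nearest = np.argsort(dist, axis=1)[:, :k]
    preds = []
    for labels in y_train[nearest]:
        values, counts = np.unique(labels, return_counts=True)
        preds.append(values[counts.argmax()])  # ties go to the smallest label
    return np.array(preds)

# Example: 1-D features from two activities.
X_train = np.array([[0.0], [0.1], [0.2], [5.0], [5.1]])
y_train = np.array([0, 0, 0, 1, 1])
print(knn_predict(X_train, y_train, np.array([[0.05], [4.9]]), k=3))  # [0 1]
```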

DT [,] is a tree-like structure in which each internal node tests a feature, each branch represents an outcome of that test, and each leaf node represents a category. Classification starts at the root node and proceeds through multiple levels of decisions until a leaf is reached. Studies have used DT to evaluate the effectiveness of multi-sensor action recognition systems.
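To make the root-to-leaf decision process concrete, here is a compact CART-style tree in NumPy. The Gini impurity criterion, axis-aligned thresholds, depth limit, and tuple-based node representation are illustrative assumptions, not a specific method from the cited studies.

```python
import numpy as np
from collections import Counter

def gini(y: np.ndarray) -> float:
    """Gini impurity of a label array."""
    _, counts = np.unique(y, return_counts=True)
    p = counts / len(y)
    return 1.0 - float(np.sum(p ** 2))

def majority(y: np.ndarray):
    return Counter(y.tolist()).most_common(1)[0][0]

def build_tree(X: np.ndarray, y: np.ndarray, depth: int = 0, max_depth: int = 3):
    """Return a leaf label, or an internal node (feature, threshold, left, right)."""
    if depth == max_depth or len(np.unique(y)) == 1:
        return majority(y)
    best = None
    for j in range(X.shape[1]):
        for thr in np.unique(X[:, j])[:-1]:        # every split leaves both sides non-empty
            left = X[:, j] <= thr
            score = left.mean() * gini(y[left]) + (~left).mean() * gini(y[~left])
            if best is None or score < best[0]:
                best = (score, j, thr, left)
    if best is None:                               # all features constant: cannot split
        return majority(y)
    _, j, thr, left = best
    return (j, thr,
            build_tree(X[left], y[left], depth + 1, max_depth),
            build_tree(X[~left], y[~left], depth + 1, max_depth))

def predict_one(node, x: np.ndarray):
    """Walk from the root, following the branch selected by each feature test."""
    while isinstance(node, tuple):
        j, thr, left, right = node
        node = left if x[j] <= thr else right
    return node

# Example: two features (e.g. a hypothetical signal variance and a posture flag).
X = np.array([[0.0, 1.0], [1.0, 1.0], [2.0, 0.0], [3.0, 0.0]])
y = np.array([0, 0, 1, 1])
tree = build_tree(X, y)
print(predict_one(tree, np.array([2.5, 0.0])))  # 1
```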

NB [,] is a classification algorithm based on Bayes' theorem that assumes the features are conditionally independent given the class. In human action recognition research, NB is used to estimate the probability density function (PDF) of the features and the posterior probability that a test sample belongs to each category.
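A Gaussian variant of NB, in which each feature's class-conditional PDF is modeled as a one-dimensional Gaussian, can be sketched in NumPy as below. The Gaussian assumption, the small variance floor, and the class name are illustrative choices; NB can equally be used with other density models.

```python
import numpy as np

class GaussianNaiveBayes:
    """Naive Bayes with one Gaussian PDF per (class, feature); features are assumed
    conditionally independent given the class."""

    def fit(self, X: np.ndarray, y: np.ndarray, var_floor: float = 1e-9) -> "GaussianNaiveBayes":
        self.classes_ = np.unique(y)
        self.mu_ = np.stack([X[y == c].mean(axis=0) for c in self.classes_])
        self.var_ = np.stack([X[y == c].var(axis=0) for c in self.classes_]) + var_floor
        self.log_prior_ = np.log(np.array([np.mean(y == c) for c in self.classes_]))
        return self

    def _joint_log_likelihood(self, X: np.ndarray) -> np.ndarray:
        # log P(c) + sum_j log N(x_j | mu_cj, var_cj), shape (n_samples, n_classes)
        diff2 = (X[:, None, :] - self.mu_[None]) ** 2
        log_pdf = -0.5 * (np.log(2 * np.pi * self.var_)[None] + diff2 / self.var_[None])
        return log_pdf.sum(axis=2) + self.log_prior_

    def predict_proba(self, X: np.ndarray) -> np.ndarray:
        jll = self._joint_log_likelihood(X)
        jll -= jll.max(axis=1, keepdims=True)      # stabilize before exponentiating
        p = np.exp(jll)
        return p / p.sum(axis=1, keepdims=True)    # posterior P(class | x)

    def predict(self, X: np.ndarray) -> np.ndarray:
        return self.classes_[self._joint_log_likelihood(X).argmax(axis=1)]

# Example with two simulated activity clusters.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0, 1, (50, 2)), rng.normal(6, 1, (50, 2))])
y = np.array([0] * 50 + [1] * 50)
nb = GaussianNaiveBayes().fit(X, y)
proba = nb.predict_proba(np.array([[0.0, 0.0], [6.0, 6.0]]))
```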

DTW [,] measures the similarity between two sequences by finding the best match (alignment) between them under certain constraints. In DTW-based HAR, the test vector is compared with every stored reference feature vector by computing its minimum-cost warping path.
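The classic dynamic-programming form of DTW, together with the comparison against stored references, can be sketched as follows. The sketch assumes 1-D sequences, an absolute-difference local cost, the standard boundary, continuity, and monotonicity constraints, no warping-window limit, and 1-nearest-neighbor labeling; the function names and the "up"/"down" labels are hypothetical.

```python
import numpy as np

def dtw_distance(a, b) -> float:
    """Cost of the minimum-cost warping path between 1-D sequences a and b."""
    n, m = len(a), len(b)
    D = np.full((n + 1, m + 1), np.inf)   # D[i, j]: best cost aligning a[:i] with b[:j]
    D[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = abs(float(a[i - 1]) - float(b[j - 1]))
            # Allowed steps: advance in a, advance in b, or advance in both.
            D[i, j] = cost + min(D[i - 1, j], D[i, j - 1], D[i - 1, j - 1])
    return float(D[n, m])

def dtw_classify(query, references, labels):
    """Assign the label of the reference sequence with the smallest DTW cost."""
    costs = [dtw_distance(query, r) for r in references]
    return labels[int(np.argmin(costs))]

# Example: a slower repetition of the "up" pattern still matches it exactly.
refs = [[0, 1, 2, 3], [3, 2, 1, 0]]
print(dtw_classify([0, 0, 1, 2, 3], refs, ["up", "down"]))  # up
```

The double loop makes the cost O(len(a) x len(b)) per comparison, which is why DTW-based recognition becomes expensive as the number of stored references grows.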

SVM [,] is a classical pattern recognition method that is well suited to small-sample and high-dimensional classification problems. It performs classification by constructing the optimal separating hyperplane in the feature space. Note, however, that SVM training usually takes a relatively long time.
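As a small illustration of the hyperplane idea, the sketch below trains a linear soft-margin SVM with Pegasos-style stochastic subgradient descent on the hinge loss. This is a swapped-in training technique chosen for brevity, not the quadratic-programming solvers or kernel SVMs typically used in the cited work; the regularization strength, epoch count, and function names are assumptions.

```python
import numpy as np

def train_linear_svm(X: np.ndarray, y: np.ndarray, lam: float = 0.01, epochs: int = 20, seed: int = 0):
    """Linear soft-margin SVM via Pegasos-style stochastic subgradient descent.
    Minimizes lam/2 * ||w||^2 + mean(max(0, 1 - y * (w . x))) with y in {-1, +1}.
    A constant 1 feature is appended so the bias is learned (and regularized) as part of w."""
    rng = np.random.default_rng(seed)
    Xa = np.hstack([X, np.ones((X.shape[0], 1))])
    w = np.zeros(Xa.shape[1])
    t = 0
    for _ in range(epochs):
        for i in rng.permutation(len(Xa)):
            t += 1
            eta = 1.0 / (lam * t)                 # decreasing step size
            violates = y[i] * (Xa[i] @ w) < 1     # inside the margin or misclassified
            w *= 1.0 - eta * lam                  # gradient step on the regularizer
            if violates:
                w += eta * y[i] * Xa[i]           # subgradient step on the hinge loss
    return w[:-1], w[-1]

def svm_predict(w: np.ndarray, b: float, X: np.ndarray) -> np.ndarray:
    """Side of the hyperplane w . x + b = 0 on which each sample falls."""
    return np.where(X @ w + b >= 0, 1, -1)

# Example: two separable activity clusters.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(-2, 1, (100, 2)), rng.normal(2, 1, (100, 2))])
y = np.array([-1] * 100 + [1] * 100)
w, b = train_linear_svm(X, y)
accuracy = np.mean(svm_predict(w, b, X) == y)
```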

ANN [,] is a computational model inspired by the structure of neurons in the brain, consisting of an input layer, one or more hidden layers, and an output layer. An ANN is trained by adjusting its connection weights and thresholds (biases), and it can learn the boundaries of the decision regions.
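The sketch below is a minimal one-hidden-layer network in NumPy, trained by full-batch gradient descent with backpropagation. The tanh hidden layer, softmax output with cross-entropy loss, layer size, learning rate, and function names are all illustrative assumptions.

```python
import numpy as np

def train_mlp(X: np.ndarray, y: np.ndarray, n_hidden: int = 16, lr: float = 0.5,
              epochs: int = 500, seed: int = 0):
    """One-hidden-layer MLP (tanh) with a softmax output, trained by full-batch gradient
    descent on the mean cross-entropy. y holds integer labels 0..C-1."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    C = int(y.max()) + 1
    W1 = rng.normal(scale=1 / np.sqrt(d), size=(d, n_hidden)); b1 = np.zeros(n_hidden)
    W2 = rng.normal(scale=1 / np.sqrt(n_hidden), size=(n_hidden, C)); b2 = np.zeros(C)
    Y = np.eye(C)[y]                                   # one-hot targets
    for _ in range(epochs):
        H = np.tanh(X @ W1 + b1)                       # hidden layer
        logits = H @ W2 + b2
        logits -= logits.max(axis=1, keepdims=True)    # numerical stability
        P = np.exp(logits); P /= P.sum(axis=1, keepdims=True)
        dlogits = (P - Y) / n                          # gradient of mean cross-entropy
        dW2 = H.T @ dlogits; db2 = dlogits.sum(axis=0)
        dH = (dlogits @ W2.T) * (1 - H ** 2)           # backpropagate through tanh
        dW1 = X.T @ dH; db1 = dH.sum(axis=0)
        W1 -= lr * dW1; b1 -= lr * db1; W2 -= lr * dW2; b2 -= lr * db2
    return W1, b1, W2, b2

def mlp_predict(params, X: np.ndarray) -> np.ndarray:
    W1, b1, W2, b2 = params
    return np.argmax(np.tanh(X @ W1 + b1) @ W2 + b2, axis=1)

# Example: three simulated activity clusters in a 2-D feature space.
rng = np.random.default_rng(0)
centers = np.array([[0.0, 0.0], [4.0, 0.0], [0.0, 4.0]])
X = np.vstack([c + 0.5 * rng.normal(size=(60, 2)) for c in centers])
y = np.repeat(np.arange(3), 60)
params = train_mlp(X, y)
accuracy = np.mean(mlp_predict(params, X) == y)
```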

Sequence-based recognition methods take past classifications into account when determining the label of the current feature vector. Such methods include the Hidden Markov Model (HMM) [-] and the Gaussian Mixture Model (GMM) [,,]. They are better suited to time series data because they can exploit correlations between data points, which improves classification accuracy.

HMM [-] is a sequence-based generative learning algorithm. In action recognition, the hidden states of an HMM correspond to different human actions, and the observations correspond to the feature vectors extracted from the data. Each hidden state emits observations according to a probability distribution, and transitions between hidden states also occur with certain probabilities.
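Given an HMM's initial, transition, and emission probabilities, the most likely sequence of hidden actions for a run of windows can be decoded with the Viterbi algorithm, sketched below in NumPy. The two states ("sitting" = 0, "walking" = 1) and all probabilities in the example are made up for illustration; in practice the per-window emission likelihoods would come from a trained model.

```python
import numpy as np

def viterbi(log_pi: np.ndarray, log_A: np.ndarray, log_B: np.ndarray) -> list:
    """Most likely hidden-state path.
    log_pi: (S,) initial log-probabilities; log_A: (S, S) with A[i, j] = P(next=j | current=i);
    log_B: (T, S) log-likelihood of each observation under each hidden state."""
    T, S = log_B.shape
    delta = log_pi + log_B[0]                  # best log-score of paths ending in each state
    back = np.zeros((T, S), dtype=int)         # best predecessor for each (t, state)
    for t in range(1, T):
        scores = delta[:, None] + log_A        # scores[i, j]: from state i, move to state j
        back[t] = scores.argmax(axis=0)
        delta = scores.max(axis=0) + log_B[t]
    path = [int(delta.argmax())]
    for t in range(T - 1, 0, -1):              # follow back-pointers from the end
        path.append(int(back[t, path[-1]]))
    return path[::-1]

# Hypothetical example: states 0 = sitting, 1 = walking, with "sticky" transitions.
pi = np.array([0.5, 0.5])
A = np.array([[0.9, 0.1], [0.1, 0.9]])
B = np.array([[0.9, 0.1], [0.9, 0.1], [0.4, 0.6], [0.9, 0.1],
              [0.1, 0.9], [0.1, 0.9], [0.1, 0.9]])  # per-window emission likelihoods
print(viterbi(np.log(pi), np.log(A), np.log(B)))  # [0, 0, 0, 0, 1, 1, 1]
```

The third window looks slightly more like walking on its own, but the transition probabilities make a brief switch unlikely, so the decoded path stays in "sitting"; this is the benefit of considering past states that the text attributes to sequence-based methods.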

GMM [,] is an unsupervised probabilistic model commonly used for data clustering, particularly in medical statistics and action recognition. Its parameters are typically estimated with the Expectation Maximization (EM) algorithm. Because EM is a local optimization method, however, the solution may converge to a local optimum.
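The EM procedure for a GMM alternates between computing each sample's responsibilities (E-step) and re-estimating the mixture weights, means, and variances (M-step). The NumPy sketch below assumes diagonal covariances, a fixed iteration count, and a farthest-point initialization; these are illustrative choices, and because EM only finds a local optimum, the result can depend on that initialization.

```python
import numpy as np

def gmm_em(X: np.ndarray, k: int, n_iter: int = 100, seed: int = 0, var_floor: float = 1e-6):
    """Fit a diagonal-covariance Gaussian mixture to X (n_samples, n_features) with EM.
    Returns (weights, means, variances, responsibilities)."""
    rng = np.random.default_rng(seed)
    n = X.shape[0]
    # Farthest-point initialization: a random first mean, then repeatedly the sample
    # farthest from all means chosen so far.
    idx = [int(rng.integers(n))]
    for _ in range(1, k):
        d2 = ((X[:, None, :] - X[idx][None, :, :]) ** 2).sum(axis=2).min(axis=1)
        idx.append(int(d2.argmax()))
    means = X[idx].copy()
    var = np.tile(X.var(axis=0), (k, 1)) + var_floor
    weights = np.full(k, 1.0 / k)
    for _ in range(n_iter):
        # E-step: responsibilities r[i, j] = P(component j | x_i)
        diff2 = (X[:, None, :] - means[None, :, :]) ** 2
        log_p = -0.5 * (np.log(2 * np.pi * var)[None] + diff2 / var[None]).sum(axis=2)
        log_p += np.log(weights)
        log_p -= log_p.max(axis=1, keepdims=True)
        resp = np.exp(log_p)
        resp /= resp.sum(axis=1, keepdims=True)
        # M-step: re-estimate weights, means and variances from the responsibilities
        nk = resp.sum(axis=0) + 1e-12
        weights = nk / n
        means = (resp.T @ X) / nk[:, None]
        diff2 = (X[:, None, :] - means[None, :, :]) ** 2
        var = (resp[:, :, None] * diff2).sum(axis=0) / nk[:, None] + var_floor
    return weights, means, var, resp

# Example: 1-D feature values drawn from two well-separated activity clusters.
rng = np.random.default_rng(1)
X = np.concatenate([rng.normal(-5, 1, 300), rng.normal(5, 1, 300)])[:, None]
weights, means, variances, resp = gmm_em(X, k=2)
```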

Understanding the characteristics, application areas, and potential strengths and weaknesses of these classifiers and methods helps researchers select an appropriate classifier for their needs, and thus improves the effectiveness of wearable sensor technology in human action recognition.

In recent years, deep learning techniques have achieved a series of remarkable results in HAR [-]. Deep learning networks are multi-layer neural networks and are currently among the most widely used HAR models, including convolutional networks [-], recurrent neural networks, etc. [-]. Deep learning HAR models build classifiers by learning deep features and fall into three main categories []: generative, discriminative, and hybrid deep learning models. Generative deep learning models include autoencoders and recurrent neural networks; HAR based on Long Short-Term Memory (LSTM) recurrent networks is a typical example [,]. Discriminative deep learning models mainly use convolutional neural networks and deep fully connected networks. As the dimensionality and complexity of HAR source data increase, adding hidden layers and widening feature dimensions allows the network to extract more representative features. Compared with fully connected networks, convolutional neural networks reduce the number of parameters through parameter sharing and benefit from local dependence and scale invariance, so they can effectively extract the spatiotemporal features of wearable sensor time series. Hybrid deep learning models combine the two types above. For example, a convolutional neural network can be connected to a recurrent neural network [], with the former mainly extracting features and the latter performing classification. Similarly, convolutional neural networks can be combined with Boltzmann machines [].

Considering the learning task of the model, the amount of data, and the quality of labels, existing models can be classified into supervised, unsupervised, and weakly supervised learning models.

Supervised learning: Supervised learning requires high-quality labels for the training data as well as sufficient data for each category. Most learning tasks in sensor data classification are supervised, and they have achieved good classification performance.

Unsupervised learning: In unsupervised learning, most models use pre-training to guide discriminative training and improve generalization when processing unlabeled data. Once an effective feature extractor has been obtained, the network is commonly fine-tuned with supervised learning. Widely adopted unsupervised pre-training algorithms include restricted Boltzmann machines [-], autoencoders [,], and their variants [,]. For example, the study in [] investigated three unsupervised techniques (sparse autoencoders, denoising autoencoders, and principal component analysis) for learning useful features from sensor data, followed by classification with support vector machines. At present, unsupervised learning cannot complete the HAR task on its own: it cannot recover the true activity labels of samples that were never labeled. Such approaches are therefore essentially two-stage: the first stage, pre-training, is unsupervised, while the pipeline as a whole is a semi-supervised learning method.
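The two-stage procedure described above can be sketched with principal component analysis, one of the three techniques mentioned, as the unsupervised stage. In this minimal standard-library sketch, PCA is fitted by power iteration on unlabeled feature vectors only, and a nearest-centroid classifier, swapped in for the support vector machine to keep the example short, is then trained on a small labeled subset in the learned feature space. The feature vectors, activity names, and sample counts are synthetic assumptions.

```python
import math
import random

def mean_vector(rows):
    return [sum(col) / len(rows) for col in zip(*rows)]

def covariance(rows, mu):
    d, n = len(mu), len(rows)
    cov = [[0.0] * d for _ in range(d)]
    for r in rows:
        c = [r[i] - mu[i] for i in range(d)]
        for i in range(d):
            for j in range(d):
                cov[i][j] += c[i] * c[j] / (n - 1)
    return cov

def mat_vec(m, v):
    return [sum(m[i][j] * v[j] for j in range(len(v))) for i in range(len(m))]

def top_component(cov, iters=200, seed=0):
    """Leading eigenvector of a symmetric matrix by power iteration."""
    rng = random.Random(seed)
    v = [rng.gauss(0.0, 1.0) for _ in cov]
    norm = math.sqrt(sum(x * x for x in v))
    v = [x / norm for x in v]
    for _ in range(iters):
        w = mat_vec(cov, v)
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            break
        v = [x / norm for x in w]
    return v

def fit_pca(unlabeled, n_components=1):
    """Stage 1 (unsupervised): learn principal directions from unlabeled feature vectors."""
    mu = mean_vector(unlabeled)
    cov = covariance(unlabeled, mu)
    comps = []
    for _ in range(n_components):
        v = top_component(cov)
        lam = sum(a * b for a, b in zip(v, mat_vec(cov, v)))  # Rayleigh quotient = eigenvalue
        comps.append(v)
        # Deflation: remove the found direction so the next search finds the next component.
        cov = [[cov[i][j] - lam * v[i] * v[j] for j in range(len(v))] for i in range(len(v))]
    return mu, comps

def project(x, mu, comps):
    return [sum(c[i] * (x[i] - mu[i]) for i in range(len(x))) for c in comps]

def fit_centroids(features, labels):
    """Stage 2 (supervised): nearest-centroid classifier on the projected features."""
    groups = {}
    for f, y in zip(features, labels):
        groups.setdefault(y, []).append(f)
    return {y: mean_vector(fs) for y, fs in groups.items()}

def predict(centroids, f):
    return min(centroids, key=lambda y: sum((a - b) ** 2 for a, b in zip(f, centroids[y])))

if __name__ == "__main__":
    rng = random.Random(42)
    def sample(label):  # synthetic 4-D feature vector for a hypothetical activity
        center = [2.0, 2.0, 0.0, 0.0] if label == "walk" else [-2.0, -2.0, 0.0, 0.0]
        return [c + rng.gauss(0, 0.5) for c in center]
    unlabeled = [sample(rng.choice(["walk", "sit"])) for _ in range(200)]
    labeled_y = ["walk", "sit"] * 5          # only 10 labeled windows
    labeled_x = [sample(y) for y in labeled_y]
    mu, comps = fit_pca(unlabeled, n_components=1)
    centroids = fit_centroids([project(x, mu, comps) for x in labeled_x], labeled_y)
    test = [(sample(y), y) for y in ["walk", "sit"] * 20]
    acc = sum(predict(centroids, project(x, mu, comps)) == y for x, y in test) / len(test)
    print("accuracy:", acc)
```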

Weakly supervised learning: Unlike supervised and unsupervised learning, weakly supervised learning covers scenarios in which the sample data are only partially or imperfectly labeled, including common forms such as semi-supervised learning and learning with uncertain labels. In semi-supervised HAR, the study in [] proposed a semi-supervised deep HAR model to address class imbalance when labeled data are limited. Through co-training, the model trained multiple classifiers on different data types and used them to classify unlabeled data. Experiments showed that, using only 10% labeled data, the semi-supervised model could outperform supervised learning models.
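Co-training of the kind described above can be illustrated with two views of each sample, for example hypothetical accelerometer-derived and gyroscope-derived features. In the sketch below (standard library only, synthetic data), each view has its own nearest-centroid classifier, a simple stand-in for the classifiers of the cited model; in every round, each view labels the unlabeled sample it is most confident about and adds it to a shared labeled pool, so the other view can learn from it. The sketch does not reproduce the class-imbalance handling of the cited work.

```python
import random

def centroids(samples, labels):
    sums, counts = {}, {}
    for x, y in zip(samples, labels):
        acc = sums.setdefault(y, [0.0] * len(x))
        for i, v in enumerate(x):
            acc[i] += v
        counts[y] = counts.get(y, 0) + 1
    return {y: [v / counts[y] for v in s] for y, s in sums.items()}

def sq_dist(a, b):
    return sum((p - q) ** 2 for p, q in zip(a, b))

def predict_with_margin(cents, x):
    """Return (label, margin); the margin is the distance gap between the two nearest centroids."""
    ranked = sorted(cents, key=lambda y: sq_dist(x, cents[y]))
    best = ranked[0]
    if len(ranked) == 1:
        return best, float("inf")
    return best, sq_dist(x, cents[ranked[1]]) - sq_dist(x, cents[best])

def co_train(labeled, labels, unlabeled, rounds=10):
    """labeled / unlabeled: lists of (view_a, view_b) feature pairs. Inputs are not modified."""
    labeled, labels, pool = list(labeled), list(labels), list(unlabeled)
    for _ in range(rounds):
        for view in (0, 1):
            if not pool:
                return labeled, labels
            cents = centroids([s[view] for s in labeled], labels)
            scored = [(predict_with_margin(cents, s[view]), i) for i, s in enumerate(pool)]
            (label, _), idx = max(scored, key=lambda t: t[0][1])  # most confident sample
            labeled.append(pool.pop(idx))  # pseudo-labeled sample joins the shared pool
            labels.append(label)
    return labeled, labels

def predict(labeled, labels, sample):
    """Combine both views by summing squared distances to each class centroid."""
    ca = centroids([s[0] for s in labeled], labels)
    cb = centroids([s[1] for s in labeled], labels)
    return min(ca, key=lambda y: sq_dist(sample[0], ca[y]) + sq_dist(sample[1], cb[y]))

if __name__ == "__main__":
    rng = random.Random(7)
    def sample(y):  # synthetic one-dimensional feature per view
        c = 1.5 if y == "run" else -1.5
        return ([c + rng.gauss(0, 1)], [c + rng.gauss(0, 1)])
    labeled_y = ["run", "walk"]
    labeled_x = [sample(y) for y in labeled_y]
    unlabeled = [sample(rng.choice(["run", "walk"])) for _ in range(40)]
    new_x, new_y = co_train(labeled_x, labeled_y, unlabeled, rounds=10)
    print(len(new_x), "samples in the labeled pool after co-training")
```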

The study in [] moved away from the reliance of supervised methods on hand-crafted feature engineering and proposed two semi-supervised methods based on convolutional neural networks (CNNs) that learn discriminative features directly from raw sensor data, using both labeled and unlabeled samples. Both supervised and semi-supervised learning presuppose high-quality, correctly labeled samples. In practice, however, and especially when sensor data are labeled by activity category, inaccurate labels often occur, which creates the need for learning with uncertain labels.

Uncertain labels typically stem from the way labels are annotated. There are currently two main types of annotation techniques for training data: offline and online annotation []. Offline annotation assigns labels after the fact, using video or audio recordings, user feedback, direct inspection of the sensor data, and similar sources. Online annotation, in contrast, assigns labels while the activity is being performed, for example with experience sampling methods.
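With offline annotation, labels usually arrive as time intervals marked while reviewing recordings, and they must be mapped onto the fixed-length windows that a classifier consumes. The sketch below assumes a hypothetical (start_s, end_s, label) interval format and assigns each sliding window the label that covers most of it, leaving the window unlabeled when that coverage falls below a threshold. Windows that straddle an activity boundary are exactly where such labels become uncertain.

```python
def overlap(a_start, a_end, b_start, b_end):
    """Length of the intersection of two time intervals (0 if they do not overlap)."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))

def label_windows(intervals, duration_s, window_s, step_s, min_coverage=0.5):
    """Map interval annotations onto sliding windows.

    intervals: list of (start_s, end_s, label) tuples (hypothetical annotation format).
    Returns (window_start_s, label or None, coverage) per window; the label is None when
    even the best-covering label spans less than `min_coverage` of the window.
    Ties go to the label that appears first in `intervals`.
    """
    if duration_s < window_s:
        return []
    n_windows = int((duration_s - window_s) // step_s) + 1
    result = []
    for k in range(n_windows):
        start = k * step_s
        end = start + window_s
        cover = {}
        for s, e, lab in intervals:
            cover[lab] = cover.get(lab, 0.0) + overlap(start, end, s, e)
        best = max(cover, key=cover.get) if cover else None
        frac = cover[best] / window_s if best is not None else 0.0
        result.append((start, best if frac >= min_coverage else None, frac))
    return result

if __name__ == "__main__":
    annotations = [(0.0, 6.0, "walk"), (6.0, 10.0, "sit")]  # e.g. marked while reviewing video
    for start, lab, frac in label_windows(annotations, 10.0, window_s=2.0, step_s=1.0):
        print(f"{start:4.1f}s  {lab}  coverage={frac:.2f}")
```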

Multiple instance learning (MIL) is a common and effective model for classification with uncertain labels. The study in [] was the first to apply MIL to HAR, proposing a new annotation strategy aimed at reducing the experience sampling frequency. Furthermore, the study in [] proposed a graph-generative MIL model for HAR based on autoregressive hidden Markov models. These models belong to traditional weakly supervised learning. Notably, the MIL idea can also be applied to deep learning models: for example, the study in [] integrated MIL into a long short-term memory (LSTM) model that can be deployed on resource-constrained microdevices for early activity recognition and monitoring.
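Under the standard MIL assumption, a bag of instances (for example, all windows recorded during the period covered by a single annotation) is labeled positive if at least one instance contains the target activity and negative otherwise; instance labels are never observed. The minimal sketch below, a deliberately simple stand-in rather than any of the cited models, learns one threshold on a hypothetical per-window score (mean signal energy) using only bag labels, predicting each bag through the maximum over its instances.

```python
def window_energy(window):
    """Hypothetical instance score: mean squared amplitude of one window."""
    return sum(v * v for v in window) / len(window)

def bag_predict(bag_scores, threshold):
    # Standard MIL assumption: a bag is positive if any instance exceeds the threshold.
    return max(bag_scores) > threshold

def fit_mil_threshold(bags, bag_labels):
    """Pick the threshold that maximizes bag-level accuracy, using only bag labels."""
    scores = [[window_energy(w) for w in bag] for bag in bags]
    candidates = sorted({s for bag in scores for s in bag})
    # Each instance score, plus one value below all of them, covers every distinct split.
    candidates = [candidates[0] - 1.0] + candidates
    best_t, best_acc = None, -1.0
    for t in candidates:
        acc = sum(bag_predict(s, t) == y for s, y in zip(scores, bag_labels)) / len(bags)
        if acc > best_acc:
            best_t, best_acc = t, acc
    return best_t, best_acc

if __name__ == "__main__":
    quiet, active = [0.1, -0.1, 0.05, -0.05], [1.2, -1.0, 0.9, -1.1]
    bags = [[quiet, quiet, active], [quiet, quiet], [active, quiet], [quiet]]
    labels = [True, False, True, False]  # one label per bag, none per window
    t, acc = fit_mil_threshold(bags, labels)
    print(f"threshold={t:.4f}  bag accuracy={acc:.2f}")
    new_bag = [quiet, active, quiet]
    print("new bag positive:", bag_predict([window_energy(w) for w in new_bag], t))
```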

With the rapid development of Internet of Things and mobile terminal technologies, data classification for wearable sensors has become an important research direction. It plays a pivotal role in pervasive computing and human-computer interaction, enriches intelligent services in everyday life, and improves the user experience that technology provides, giving it broad application prospects and research significance. The future of wearable sensor time series data classification is full of both potential and challenges; this section discusses several possible development directions and challenges.

Further application of deep learning: Deep learning has made significant progress in wearable sensor data classification, but many opportunities remain. One is to explore deeper models to improve classification performance. Deep neural network architectures can be optimized for different types of time series data, including biomedical, sports, and environmental data. In addition, researchers can combine deep learning with other techniques, such as reinforcement learning and transfer learning, to better handle the diversity and complexity of the data.

Integration of multi-modal data: Future research can place more emphasis on integrating multi-modal data, that is, data from different sensor types or sources such as images, audio, and physiological signals. Integrating multi-modal data provides more comprehensive information and helps classify and understand user status more accurately. However, it also brings challenges, including data alignment, feature fusion, and model design, which future research needs to address.
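Data alignment and feature fusion can be illustrated for two sensor streams sampled at different rates. The sketch below (standard library only) resamples each stream onto a common timeline by linear interpolation and then concatenates per-window mean and standard-deviation features from every modality into one vector, a simple form of feature-level fusion. The stream names, sampling rates, and choice of features are assumptions, and the sketch ignores clock drift and missing samples, which real systems must handle.

```python
import bisect
import math
import statistics

def interpolate(times, values, t):
    """Linearly interpolate a sampled signal at time t (clamped at both ends)."""
    if t <= times[0]:
        return values[0]
    if t >= times[-1]:
        return values[-1]
    i = bisect.bisect_right(times, t)  # times[i-1] <= t < times[i]
    t0, t1 = times[i - 1], times[i]
    v0, v1 = values[i - 1], values[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)

def align(streams, rate_hz, duration_s):
    """Resample every {name: (times, values)} stream onto one common timeline."""
    n = int(duration_s * rate_hz)
    grid = [k / rate_hz for k in range(n)]
    aligned = {name: [interpolate(ts, vs, t) for t in grid] for name, (ts, vs) in streams.items()}
    return grid, aligned

def fused_features(aligned, start, length):
    """Feature-level fusion: concatenate [mean, std] of every modality within one window."""
    vec = []
    for name in sorted(aligned):
        seg = aligned[name][start:start + length]
        vec += [statistics.fmean(seg), statistics.pstdev(seg)]
    return vec

if __name__ == "__main__":
    acc_t = [k / 50 for k in range(100)]   # hypothetical accelerometer channel, 2 s at 50 Hz
    acc_v = [math.sin(2 * math.pi * 2 * t) for t in acc_t]
    phys_t = [k / 20 for k in range(40)]   # hypothetical physiological channel, 2 s at 20 Hz
    phys_v = [70 + 5 * t for t in phys_t]
    grid, aligned = align({"acc": (acc_t, acc_v), "phys": (phys_t, phys_v)}, 25, 2.0)
    print(len(grid), fused_features(aligned, 0, 25))
```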

Real-time classification and feedback: Wearable sensor technology may develop toward real-time classification and feedback. Real-time classification makes the system more interactive and can give users instant health and behavioral advice. In health monitoring, for example, it can help track heart conditions and trigger timely emergency medical intervention. Achieving it requires addressing data processing and communication delays as well as the protection of user privacy and data security.
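Real-time classification is often organized around a fixed-size buffer of the most recent samples that is classified every few samples (the hop). The sketch below uses `collections.deque` with `maxlen` for the buffer; the window and hop sizes and the placeholder energy classifier are hypothetical. It makes one source of delay explicit: at a sampling rate of f Hz, the first decision cannot be issued before a full window of W samples has arrived (W/f seconds), and later decisions follow every H/f seconds for a hop of H samples.

```python
from collections import deque

class StreamingClassifier:
    """Classify a sensor stream with a sliding window of `window` samples every `hop` samples."""

    def __init__(self, classify, window, hop):
        self.classify = classify
        self.buffer = deque(maxlen=window)  # the oldest sample drops out automatically
        self.hop = hop
        self.since_last = 0

    def push(self, sample):
        """Add one sample; return a label when a new decision is due, otherwise None."""
        self.buffer.append(sample)
        self.since_last += 1
        if len(self.buffer) == self.buffer.maxlen and self.since_last >= self.hop:
            self.since_last = 0
            return self.classify(list(self.buffer))
        return None

def energy_rule(window, threshold=0.5):
    """Placeholder classifier (hypothetical): 'active' if mean squared amplitude is high."""
    energy = sum(v * v for v in window) / len(window)
    return "active" if energy > threshold else "still"

if __name__ == "__main__":
    rate_hz, window, hop = 50, 100, 25
    clf = StreamingClassifier(energy_rule, window, hop)
    stream = [0.1] * 150 + [1.5, -1.5] * 75
    for i, x in enumerate(stream):
        label = clf.push(x)
        if label is not None:
            print(f"t={(i + 1) / rate_hz:.2f}s -> {label}")
    print("first decision latency:", window / rate_hz, "s")
```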

Model interpretability: With the widespread use of complex models such as deep networks, interpretability has become an important issue. Users often need to understand why the system made a specific classification decision, especially in medicine. Future research can focus on improving interpretability so that users better understand how the system works and clinicians and decision makers can trust and rely on it.

Data privacy and ethics issues: Data privacy and ethics will remain important challenges for wearable sensor technology. Users' personal data must be strictly protected, and the ethical use of data in research must be ensured. Future research needs to design privacy-preserving mechanisms and ethical guidelines that balance the usefulness of data against user rights.

Data annotation and datasets: Building rich and diverse datasets is crucial for advancing research. Future work can invest more effort in open datasets for wearable sensor data classification so that researchers can better evaluate and compare different methods. Data annotation also remains a challenge, and more effective annotation strategies and tools need to be developed.
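How data are split strongly affects comparisons between methods. One widely used protocol in wearable HAR is leave-one-subject-out (LOSO) evaluation, which holds out each subject's data in turn so that no subject appears in both the training and test sets. The sketch below generates such splits from per-sample subject IDs; the IDs are hypothetical, and model training and scoring on each split are left out.

```python
from collections import defaultdict

def loso_splits(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices) for leave-one-subject-out evaluation."""
    by_subject = defaultdict(list)
    for idx, sid in enumerate(subject_ids):
        by_subject[sid].append(idx)
    for held_out in sorted(by_subject):
        test = by_subject[held_out]
        train = [i for i, sid in enumerate(subject_ids) if sid != held_out]
        yield held_out, train, test

if __name__ == "__main__":
    subjects = ["s1", "s1", "s2", "s3", "s2", "s3"]  # one hypothetical subject ID per window
    for held_out, train, test in loso_splits(subjects):
        print(held_out, "train:", train, "test:", test)
```

Averaging the per-subject scores then gives an estimate of how a method generalizes to people it has never seen, which is usually harder than a random split of windows.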

Practical application and commercialization: Ultimately, research needs to be more closely integrated with practical applications and commercialization. Applying wearable sensor technology to health monitoring, sports training, industrial production, and other fields can create huge business opportunities. Future research needs to focus on how to effectively translate research results into practical products and solutions to meet market needs.

Although many challenges remain in wearable sensor time series data classification, continued research will keep driving progress in this field and open up more possibilities for health, scientific research, and industrial applications. By continuously exploring new methods and technologies, we can better understand and use wearable sensor data to benefit society and individuals.

This paper has provided a comprehensive review of motion data processing and classification techniques based on wearable sensors, with particular emphasis on feature extraction and classification models. The rapid development of wearable technology has opened new opportunities for real-time monitoring of physiological and motion signals, offering rich data sources for health monitoring, sports analysis, and human-computer interaction, and wearable sensors already play a crucial role in health, sports, entertainment, production, and industry. Our review focuses on the core of the HAR framework, namely the feature extraction and activity recognition modules. We examined shallow and deep feature extraction techniques in detail and discussed the application of traditional machine learning models and deep learning models to classification. This analysis aims to advance the application of wearable technology in health monitoring, sports analysis, and human-computer interaction. At the same time, the field faces open challenges that offer opportunities for future research. Looking ahead, we anticipate more exciting developments in health, entertainment, production, and social interaction as wearable technology continues to reshape and enhance the way we live.

Zhang S, Li Y, Zhang S, Shahabi F, Xia S, Deng Y, Alshurafa N. Deep Learning in Human Activity Recognition with Wearable Sensors: A Review on Advances. Sensors (Basel). 2022 Feb 14;22(4):1476. doi: 10.3390/s22041476. PMID: 35214377; PMCID: PMC8879042.

Hu Y, Yan Y. A Wearable Electrostatic Sensor for Human Activity Monitoring. IEEE Trans Instrum Meas. 2022; 71.

Verma U, Tyagi P, Kaur M. Artificial intelligence in human activity recognition: a review. International Journal of Sensor Networks. 2023; 41:1; 1.

Peng Z, Zheng S, Zhang X, Yang J, Wu S, Ding C, Lei L, Chen L, Feng G. Flexible Wearable Pressure Sensor Based on Collagen Fiber Material. Micromachines (Basel). 2022 Apr 28;13(5):694. doi: 10.3390/mi13050694. PMID: 35630161; PMCID: PMC9143406.

Ige AO, Noor MMH. A survey on unsupervised learning for wearable sensor-based activity recognition. Appl Soft Comput. 2022; 127: 109363.

Kristoffersson A, Lindén M. A Systematic Review of Wearable Sensors for Monitoring Physical Activity. Sensors (Basel). 2022 Jan 12;22(2):573. doi: 10.3390/s22020573. PMID: 35062531; PMCID: PMC8778538.

Kumar R, Kumar S. Survey on artificial intelligence-based human action recognition in video sequences. 2023; 62:2; 023102.

Putra PU, Shima K, Shimatani K. A deep neural network model for multi-view human activity recognition. PLoS One. 2022 Jan 7;17(1):e0262181. doi: 10.1371/journal.pone.0262181. PMID: 34995315; PMCID: PMC8741063.

Meratwal M, Spicher N, Deserno TM. Multi-camera and multi-person indoor activity recognition for continuous health monitoring using long short term memory. 2022; 12037: 64-71.

Deotale D. HARTIV: Human Activity Recognition Using Temporal Information in Videos. Computers, Materials & Continua. 2021; 70: 2;3919-3938.

Jin Z, Li Z, Gan T, Fu Z, Zhang C, He Z, Zhang H, Wang P, Liu J, Ye X. A Novel Central Camera Calibration Method Recording Point-to-Point Distortion for Vision-Based Human Activity Recognition. Sensors (Basel). 2022 May 5;22(9):3524. doi: 10.3390/s22093524. PMID: 35591215; PMCID: PMC9105339.

Jang Y, Jeong I, Younesi Heravi M, Sarkar S, Shin H, Ahn Y. Multi-Camera-Based Human Activity Recognition for Human-Robot Collaboration in Construction. Sensors (Basel). 2023 Aug 7;23(15):6997. doi: 10.3390/s23156997. PMID: 37571779; PMCID: PMC10422633.

Bernaś M, Płaczek B, Lewandowski M. Ensemble of RNN Classifiers for Activity Detection Using a Smartphone and Supporting Nodes. Sensors (Basel). 2022 Dec 3;22(23):9451. doi: 10.3390/s22239451. PMID: 36502154; PMCID: PMC9739648.

Papel JF, Munaka T. Home Activity Recognition by Sounds of Daily Life Using Improved Feature Extraction Method. IEICE Trans Inf Syst. 2023; E106.D:4;450-458.

Wang X, Shang J. Human Activity Recognition Based on Two-Channel Residual–GRU–ECA Module with Two Types of Sensors. 2023; 12: 1622.

Qin Z, Zhang Y, Meng S, Qin Z, Choo KKR. Imaging and fusing time series for wearable sensor-based human activity recognition. Information Fusion. 2020; 53:80-87.

Lara ÓD, Labrador MA. A survey on human activity recognition using wearable sensors. IEEE Communications Surveys and Tutorials. 2013; 15: 3; 1192-1209.

Osmani V, Balasubramaniam S, Botvich D. Human activity recognition in pervasive health-care: Supporting efficient remote collaboration. Journal of Network and Computer Applications. 2008; 31: 4; 628-655.

Dinarević EC, Husić JB, Baraković S. Issues of Human Activity Recognition in Healthcare. 2019 18th International Symposium INFOTEH-JAHORINA, INFOTEH. 2019.

Nadeem A, Jalal A, Kim K. Automatic human posture estimation for sport activity recognition with robust body parts detection and entropy markov model. Multimed Tools Appl. 2021; 80:14; 21465–21498.

Zhuang Z, Xue Y. Sport-Related Human Activity Detection and Recognition Using a Smartwatch. Sensors (Basel). 2019 Nov 16;19(22):5001. doi: 10.3390/s19225001. PMID: 31744127; PMCID: PMC6891622.

Hsu YL, Yang SC, Chang HC, Lai HC. Human Daily and Sport Activity Recognition Using a Wearable Inertial Sensor Network. IEEE Access. 2018; 6:31715-31728.

Zhang F, Wu TY, Pan JS, Ding G, Li Z. Human motion recognition based on SVM in VR art media interaction environment. Human-centric Computing and Information Sciences. 2019; 9: 1; 1-15.

Fan YC, Wen CY. Real-Time Human Activity Recognition for VR Simulators with Body Area Networks. 2023; 145-146.

Roitberg A, Perzylo A, Somani N, Giuliani M, Rickert M, Knoll A. Human activity recognition in the context of industrial human-robot interaction. Asia-Pacific Signal and Information Processing Association Annual Summit and Conference. APSIPA.

Mohsen S, Elkaseer A, Scholz SG. Industry 4.0-Oriented Deep Learning Models for Human Activity Recognition. IEEE Access. 2021; 9:150508-150521.

Triboan D, Chen L, Chen F, Wang Z. A semantics-based approach to sensor data segmentation in real-time Activity Recognition. Future Generation Computer Systems. 2019; 93: 224-236.

Banos O, Galvez JM, Damas M, Pomares H, Rojas I. Window size impact in human activity recognition. Sensors (Basel). 2014 Apr 9;14(4):6474-99. doi: 10.3390/s140406474. PMID: 24721766; PMCID: PMC4029702.

Noor MHM, Salcic Z, Wang KIK. Adaptive sliding window segmentation for physical activity recognition using a single tri-axial accelerometer. Pervasive Mob Comput. 2017; 38:41-59.

Wang J, Chen Y, Hao S, Peng X, Hu L. Deep learning for sensor-based activity recognition: A survey. Pattern Recognit Lett. 2019; 119: 3-11.

Nweke HF, Teh YW, Al-garadi MA, Alo UR. Deep learning algorithms for human activity recognition using mobile and wearable sensor networks: State of the art and research challenges. Expert Syst Appl. 2018; 105: 233-261.

Shoaib M, Bosch S, Incel OD, Scholten H, Havinga PJ. Complex Human Activity Recognition Using Smartphone and Wrist-Worn Motion Sensors. Sensors (Basel). 2016 Mar 24;16(4):426. doi: 10.3390/s16040426. PMID: 27023543; PMCID: PMC4850940.

Figo D, Diniz PC, Ferreira DR, Cardoso JMP. Preprocessing techniques for context recognition from accelerometer data. Pers Ubiquitous Comput. 2010; 14:7;645-662.

Wang Y, Cang S, Yu H. A survey on wearable sensor modality centred human activity recognition in health care. Expert Syst Appl. 2019; 137:167-190.

Altun K, Barshan B, Tunçel O. Comparative study on classifying human activities with miniature inertial and magnetic sensors. Pattern Recognit. 2010; 43:10; 3605-3620.

Zhang M, Sawchuk AA. Human daily activity recognition with sparse representation using wearable sensors. IEEE J Biomed Health Inform. 2013 May;17(3):553-60. doi: 10.1109/jbhi.2013.2253613. PMID: 24592458.

Bao L, Intille SS. Activity recognition from user-annotated acceleration data. Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). 2004; 3001:1-17.

Altun K, Barshan B. Human activity recognition using inertial/magnetic sensor units. Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). 2010; 6219 :38-51.

Khan AM, Lee YK, Lee SY, Kim TS. A triaxial accelerometer-based physical-activity recognition via augmented-signal features and a hierarchical recognizer. IEEE Trans Inf Technol Biomed. 2010 Sep;14(5):1166-72. doi: 10.1109/TITB.2010.2051955. Epub 2010 Jun 7. PMID: 20529753.

Aljarrah AA, Ali AH. Human Activity Recognition using PCA and BiLSTM Recurrent Neural Networks. 2nd International Conference on Engineering Technology and its Applications, IICETA. 2019; 156-160.

Chen Z, Zhu Q, Soh YC, Zhang L. Robust Human Activity Recognition Using Smartphone Sensors via CT-PCA and Online SVM. IEEE Trans Industr Inform. 2017; 13:6;3070-3080.

Sekhon NK, Singh G. Hybrid Technique for Human Activities and Actions Recognition Using PCA, Voting, and K-means. Lecture Notes in Networks and Systems. 2023; 492:351-363.

Yuan G, Zang C, Zeng P. Human Activity Recognition Using LDA And Stochastic Configuration Networks. 2nd International Conference on Computer Science, Electronic Information Engineering and Intelligent Control Technology, CEI. 2022; 415-420.

Bhuiyan RA, Amiruzzaman M, Ahmed N, Islam MDR. Efficient frequency domain feature extraction model using EPS and LDA for human activity recognition. Proceedings of the 3rd IEEE International Conference on Knowledge Innovation and Invention 2020, ICKII. 2020; 344-347.

Hamed M, Abidine B, Fergani B, Oualkadi AEl. News Schemes for Activity Recognition Systems Using PCA-WSVM, ICA-WSVM, and LDA-WSVM. 2015; 6: 505-521.

Uddin MZ, Lee JJ, Kim TS. Shape-based human activity recognition using independent component analysis and hidden markov model. Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). 2008; 5027: 245-254.

Altun K, Barshan B, Tunçel O. Comparative study on classifying human activities with miniature inertial and magnetic sensors. Pattern Recognit. 2010; 43:10; 3605-3620.

Mäntyjärvi J, Himberg J, Seppänen T. Recognizing human motion with multiple acceleration sensors. Proceedings of the IEEE International Conference on Systems, Man and Cybernetics. 2001; 2:747-752.

Zeng M. Convolutional Neural Networks for human activity recognition using mobile sensors. Proceedings of the 2014 6th International Conference on Mobile Computing, Applications and Services. MobiCASE. 2014; 197-205.

Wang J, Chen Y, Hao S, Peng X, Hu L. Deep learning for sensor-based activity recognition: A survey. Pattern Recognit Lett. 2019; 119:3-11.

Chen Y, Xue Y. A Deep Learning Approach to Human Activity Recognition Based on Single Accelerometer. Proceedings - 2015 IEEE International Conference on Systems, Man, and Cybernetics SMC 2015. 2016; 1488-1492.

Kautz T, Groh BH, Hannink J, Jensen U, Strubberg H, Eskofier BM. Activity recognition in beach volleyball using a Deep Convolutional Neural Network: Leveraging the potential of Deep Learning in sports. Data Min Knowl Discov. 2017; 31: 6; 1678-1705.

Ronao CA, Cho SB. Human activity recognition with smartphone sensors using deep learning neural networks. Expert Syst Appl. 2016; 59: 235-244.

Bengio Y. Learning deep architectures for AI. Foundations and Trends in Machine Learning. 2009; 2:1; 1-27.

Ordóñez FJ, Roggen D. Deep Convolutional and LSTM Recurrent Neural Networks for Multimodal Wearable Activity Recognition. Sensors (Basel). 2016 Jan 18;16(1):115. doi: 10.3390/s16010115. PMID: 26797612; PMCID: PMC4732148.

Babu GS, Zhao P, Li XL. Deep convolutional neural network based regression approach for estimation of remaining useful life. Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). 2016; 9642: 214-228.

Ha S, Yun JM, Choi S. Multi-modal Convolutional Neural Networks for Activity Recognition. Proceedings - 2015 IEEE International Conference on Systems, Man, and Cybernetics, SMC 2015. 2016; 3017-3022.

Ha S, Choi S. Convolutional neural networks for human activity recognition using multiple accelerometer and gyroscope sensors. Proceedings of the International Joint Conference on Neural Networks. 2016; 381-388.

Ravi D, Wong C, Lo B, Yang GZ. A Deep Learning Approach to on-Node Sensor Data Analytics for Mobile or Wearable Devices. IEEE J Biomed Health Inform. 2017 Jan;21(1):56-64. doi: 10.1109/JBHI.2016.2633287. Epub 2016 Dec 23. PMID: 28026792.

Jiang W, Yin Z. Human activity recognition using wearable sensors by deep convolutional neural networks. MM 2015 - Proceedings of the 2015 ACM Multimedia Conference. 2015; 1307-1310.

Chen K, Yao L, Gu T, Yu Z, Wang X, Zhang D. Fullie and Wiselie: A Dual-Stream Recurrent Convolutional Attention Model for Activity Recognition. 2017. https://arxiv.org/abs/1711.07661v1

Hachiya H, Sugiyama M, Ueda N. Importance-weighted least-squares probabilistic classifier for covariate shift adaptation with application to human activity recognition. Neurocomputing. 2012; 80: 93-101.

Sani S, Wiratunga N, Massie S, Cooper K. kNN Sampling for Personalised Human Activity Recognition. International Conference on Case-Based Reasoning. 2017; 10339: 330-344.

Fan L, Wang Z, Wang H. Human activity recognition model based on decision tree. Proceedings - 2013 International Conference on Advanced Cloud and Big Data, CBD. 2013; 64-68.

Maswadi K, Ghani NA, Hamid S, Rasheed MB. Human activity classification using Decision Tree and Naïve Bayes classifiers. Multimed Tools Appl. 2021; 80: 14; 21709-21726.

Fan S, Jia Y, Jia C. A Feature Selection and Classification Method for Activity Recognition Based on an Inertial Sensing Unit. 2019; 10: 290.

Jiménez AR, Seco F. Multi-Event Naive Bayes Classifier for Activity Recognition in the UCAmI Cup. 2018; 2:1264.

Kim H, Ahn CR, Engelhaupt D, Lee SH. Application of dynamic time warping to the recognition of mixed equipment activities in cycle time measurement. Autom Constr. 2018; 87: 225-234.

Zhang H, Dong Y, Li J, Xu D. Dynamic Time Warping under Product Quantization, with Applications to Time-Series Data Similarity Search. IEEE Internet Things J. 2022; 9:14; 11814-11826.

Chathuramali KGM, Rodrigo R. Faster human activity recognition with SVM. International Conference on Advances in ICT for Emerging Regions. ICTer 2012 - Conference Proceedings. 2012; 197-203.

Qian H, Mao Y, Xiang W, Wang Z. Recognition of human activities using SVM multi-class classifier. Pattern Recognit Lett. 2010; 31:2; 100-111.

Noor S, Uddin V. Using ANN for Multi-View Activity Recognition in Indoor Environment. Proceedings - 14th International Conference on Frontiers of Information Technology, FIT 2016. 2017; 258-263.

Bangaru SS, Wang C, Busam SA, Aghazadeh F. ANN-based automated scaffold builder activity recognition through wearable EMG and IMU sensors. Autom Constr. 2021; 126: 103653.

Zhang L, Wu X, Luo D. Human activity recognition with HMM-DNN model. Proceedings of 2015 IEEE 14th International Conference on Cognitive Informatics and Cognitive Computing, ICCI*CC 2015. 2015; 192-197.

Cheng X, Huang B. CSI-Based Human Continuous Activity Recognition Using GMM-HMM. IEEE Sens J. 2022; 22:19; 18709-18717.

Xue T, Liu H. Hidden Markov Model and Its Application in Human Activity Recognition and Fall Detection: A Review. Lecture Notes in Electrical Engineering. 2022; 878: 863-869.

Cheng X, Huang B, Zong J. Device-Free Human Activity Recognition Based on GMM-HMM Using Channel State Information. IEEE Access. 2021; 9:76592-76601.

Qin W, Wu HN. Switching GMM-HMM for Complex Human Activity Modeling and Recognition. Proceedings - 2022 Chinese Automation Congress. CAC. 2022; 696-701.

Wang J, Chen Y, Hao S, Peng X, Hu L. Deep learning for sensor-based activity recognition: A survey. Pattern Recognit Lett. 2019; 119:3-11.

Li X, Zhao P, Wu M, Chen Z, Zhang L. Deep learning for human activity recognition. Neurocomputing. 2021; 444: 214-216.

Kumar P, Chauhan S, Awasthi LK. Human Activity Recognition (HAR) Using Deep Learning: Review, Methodologies, Progress and Future Research Directions. Archives of Computational Methods in Engineering. 2023; 1: 1-41.

Saini S, Juneja A, Shrivastava A. Human Activity Recognition using Deep Learning: Past, Present and Future. 2023 1st International Conference on Intelligent Computing and Research Trends. ICRT. 2023.

Kanjilal R, Uysal I. The Future of Human Activity Recognition: Deep Learning or Feature Engineering? Neural Process Lett. 2021; 53:1;561-579.

Ha S, Choi S. Convolutional neural networks for human activity recognition using multiple accelerometer and gyroscope sensors. Proceedings of the International Joint Conference on Neural Networks. 2016; 381-388.

Cruciani F. Feature learning for Human Activity Recognition using Convolutional Neural Networks: A case study for Inertial Measurement Unit and audio data. CCF Transactions on Pervasive Computing and Interaction. 2020; 2:1; 18-32.

Xu W, Pang Y, Yang Y, Liu Y. Human Activity Recognition Based on Convolutional Neural Network. Proceedings - International Conference on Pattern Recognition. 2018; 165-170.

Tang L, Jia Y, Qian Y, Yi S, Yuan P. Human activity recognition based on mixed CNN with radar multi-spectrogram. IEEE Sens J. 2021; 21:22; 25950-25962.

Chen K, Zhang D, Yao L, Guo B, Yu Z, Liu Y. Deep Learning for Sensor-based Human Activity Recognition. ACM Computing Surveys (CSUR). 2021; 54: 4.

Xia K, Huang J, Wang H. LSTM-CNN Architecture for Human Activity Recognition. IEEE Access. 2020; 8:56855–56866.

Mutegeki R, Han DS. A CNN-LSTM Approach to Human Activity Recognition. 2020 International Conference on Artificial Intelligence in Information and Communication. ICAIIC 2020. 2020; 362–366.

Gajjala KS, Chakraborty B. Human Activity Recognition based on LSTM Neural Network Optimized by PSO Algorithm. 4th IEEE International Conference on Knowledge Innovation and Invention 2021, ICKII 2021. 2021; 128–133.

Aghaei A, Nazari A, Moghaddam ME. Sparse Deep LSTMs with Convolutional Attention for Human Action Recognition. SN Comput Sci. 2021; 2:3; 1–14.

Sun Y, Liang D, Wang X, Tang X. DeepID3: Face Recognition with Very Deep Neural Networks. 2015. http://arxiv.org/abs/1502.00873

Cao J, Li W, Wang Q, Yu M. A sensor-based human activity recognition system via restricted boltzmann machine and extended space forest. Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). 2018; 10794 LNCS:87–94.

Mocanu DC. Factored four way conditional restricted Boltzmann machines for activity recognition. Pattern Recognit Lett. 2015; 66:100–108.

Karakus E, Kose H. Conditional restricted Boltzmann machine as a generative model for body-worn sensor signals. IET Signal Processing. 2020; 14:10;725–736.

Zou H, Zhou Y, Yang J, Jiang H, Xie L, Spanos CJ. DeepSense: Device-Free Human Activity Recognition via Autoencoder Long-Term Recurrent Convolutional Network. IEEE International Conference on Communications. 2018; 2018.

Sadeghi-Adl Z, Ahmad F. Semi-Supervised Convolutional Autoencoder with Attention Mechanism for Activity Recognition. 31st European Signal Processing Conference (EUSIPCO). 2023; 785–789.

Campbell C, Ahmad F. Attention-augmented convolutional autoencoder for radar-based human activity recognition. 2020 IEEE International Radar Conference, RADAR 2020. 2020; 990–995.

Garcia KD. An ensemble of autonomous auto-encoders for human activity recognition. 2021; 439: 271–280.

Kwon Y, Kang K, Bae C. Unsupervised learning for human activity recognition using smartphone sensors. Expert Syst Appl. 2014; 41:14;6067–6074.

Chen K, Yao L, Zhang D, Wang X, Chang X, Nie F. A Semisupervised Recurrent Convolutional Attention Model for Human Activity Recognition. IEEE Trans Neural Netw Learn Syst. 2020 May;31(5):1747-1756. doi: 10.1109/TNNLS.2019.2927224. Epub 2019 Jul 19. PMID: 31329134.

Zeng M, Yu T, Wang X, Nguyen LT, Mengshoel OJ, Lane I. Semi-Supervised Convolutional Neural Networks for Human Activity Recognition. Proceedings - 2017 IEEE International Conference on Big Data, Big Data 2017. 2018; 522–529.

Stikic M, Larlus D, Ebert S, Schiele B. Weakly Supervised Recognition of Daily Life Activities with Wearable Sensors. IEEE Trans Pattern Anal Mach Intell. 2011 Dec;33(12):2521-37. doi: 10.1109/TPAMI.2011.36. Epub 2011 Feb 24. PMID: 21339526.

Chavarriaga R. The Opportunity challenge: A benchmark database for on-body sensor-based activity recognition. Pattern Recognit Lett. 2013; 34: 15; 2033–2042.

Kurian D, Chirag D, Harsha P, Simhadri V, Jain P. Multiple Instance Learning for Efficient Sequential Data Classification on Resource-constrained Devices. doi: 10.5555/3327546.3327753.

How to cite this article: Xiong X, Xiong X, Lian C, Zeng K. A Survey of Motion Data Processing and Classification Techniques Based on Wearable Sensors. IgMin Res. 04 Dec, 2023; 1(1): 105-115. IgMin ID: igmin123; DOI: 10.61927/igmin123; Available at: www.igminresearch.com/articles/pdf/igmin123.pdf


Address for correspondence:

Chao Lian, School of Control Engineering, Northeastern University at Qinhuangdao, Qinhuangdao 066004, China


Copyright: © 2023 Xiong X, et al. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Figure 1: The basic framework of HAR is based on sensor time...


Table 1: Feature types in action recognition tasks....


Zhang S, Li Y, Zhang S, Shahabi F, Xia S, Deng Y, Alshurafa N. Deep Learning in Human Activity Recognition with Wearable Sensors: A Review on Advances. Sensors (Basel). 2022 Feb 14;22(4):1476. doi: 10.3390/s22041476. PMID: 35214377; PMCID: PMC8879042.

Hu Y, Yan Y. A Wearable Electrostatic Sensor for Human Activity Monitoring. IEEE Trans Instrum Meas. 2022; 71.

Verma U, Tyagi P, Kaur M. “Artificial intelligence in human activity recognition: a review.” International Journal of Sensor Networks. 2023; 41:1; 1.

Peng Z, Zheng S, Zhang X, Yang J, Wu S, Ding C, Lei L, Chen L, Feng G. Flexible Wearable Pressure Sensor Based on Collagen Fiber Material. Micromachines (Basel). 2022 Apr 28;13(5):694. doi: 10.3390/mi13050694. PMID: 35630161; PMCID: PMC9143406.

Ige AO, Noor MMH. A survey on unsupervised learning for wearable sensor-based activity recognition. Appl Soft Comput. 2022; 127: 109363.

Kristoffersson A, Lindén M. A Systematic Review of Wearable Sensors for Monitoring Physical Activity. Sensors (Basel). 2022 Jan 12;22(2):573. doi: 10.3390/s22020573. PMID: 35062531; PMCID: PMC8778538.

Kumar R, Kumar S. Survey on artificial intelligence-based human action recognition in video sequences. 2023; 62(2): 023102.

Putra PU, Shima K, Shimatani K. A deep neural network model for multi-view human activity recognition. PLoS One. 2022 Jan 7;17(1):e0262181. doi: 10.1371/journal.pone.0262181. PMID: 34995315; PMCID: PMC8741063.

Meratwal M, Spicher N, Deserno TM. Multi-camera and multi-person indoor activity recognition for continuous health monitoring using long short term memory. 2022; 12037: 64-71.

Deotale D. HARTIV: Human Activity Recognition Using Temporal Information in Videos. Computers, Materials & Continua. 2021; 70(2): 3919-3938.

Jin Z, Li Z, Gan T, Fu Z, Zhang C, He Z, Zhang H, Wang P, Liu J, Ye X. A Novel Central Camera Calibration Method Recording Point-to-Point Distortion for Vision-Based Human Activity Recognition. Sensors (Basel). 2022 May 5;22(9):3524. doi: 10.3390/s22093524. PMID: 35591215; PMCID: PMC9105339.

Jang Y, Jeong I, Younesi Heravi M, Sarkar S, Shin H, Ahn Y. Multi-Camera-Based Human Activity Recognition for Human-Robot Collaboration in Construction. Sensors (Basel). 2023 Aug 7;23(15):6997. doi: 10.3390/s23156997. PMID: 37571779; PMCID: PMC10422633.

Bernaś M, Płaczek B, Lewandowski M. Ensemble of RNN Classifiers for Activity Detection Using a Smartphone and Supporting Nodes. Sensors (Basel). 2022 Dec 3;22(23):9451. doi: 10.3390/s22239451. PMID: 36502154; PMCID: PMC9739648.

Papel JF, Munaka T. Home Activity Recognition by Sounds of Daily Life Using Improved Feature Extraction Method. IEICE Trans Inf Syst. 2023; E106.D(4): 450-458.

Wang X, Shang J. Human Activity Recognition Based on Two-Channel Residual–GRU–ECA Module with Two Types of Sensors. 2023; 12: 1622.

Qin Z, Zhang Y, Meng S, Qin Z, Choo KKR. Imaging and fusing time series for wearable sensor-based human activity recognition. Information Fusion. 2020; 53:80-87.

Lara ÓD, Labrador MA. A survey on human activity recognition using wearable sensors. IEEE Communications Surveys and Tutorials. 2013; 15(3): 1192-1209.

Osmani V, Balasubramaniam S, Botvich D. Human activity recognition in pervasive health-care: Supporting efficient remote collaboration. Journal of Network and Computer Applications. 2008; 31(4): 628-655.

Dinarević EC, Husić JB, Baraković S. Issues of Human Activity Recognition in Healthcare. 2019 18th International Symposium INFOTEH-JAHORINA, INFOTEH. 2019.

Nadeem A, Jalal A, Kim K. Automatic human posture estimation for sport activity recognition with robust body parts detection and entropy markov model. Multimed Tools Appl. 2021; 80(14): 21465-21498.

Zhuang Z, Xue Y. Sport-Related Human Activity Detection and Recognition Using a Smartwatch. Sensors (Basel). 2019 Nov 16;19(22):5001. doi: 10.3390/s19225001. PMID: 31744127; PMCID: PMC6891622.

Hsu YL, Yang SC, Chang HC, Lai HC. Human Daily and Sport Activity Recognition Using a Wearable Inertial Sensor Network. IEEE Access. 2018; 6: 31715-31728.

Zhang F, Wu TY, Pan JS, Ding G, Li Z. Human motion recognition based on SVM in VR art media interaction environment. Human-centric Computing and Information Sciences. 2019; 9(1): 1-15.

Fan YC, Wen CY. Real-Time Human Activity Recognition for VR Simulators with Body Area Networks. 2023; 145-146.

Roitberg A, Perzylo A, Somani N, Giuliani M, Rickert M, Knoll A. Human activity recognition in the context of industrial human-robot interaction. Asia-Pacific Signal and Information Processing Association Annual Summit and Conference. APSIPA.

Mohsen S, Elkaseer A, Scholz SG. Industry 4.0-Oriented Deep Learning Models for Human Activity Recognition. IEEE Access. 2021; 9: 150508-150521.

Triboan D, Chen L, Chen F, Wang Z. A semantics-based approach to sensor data segmentation in real-time Activity Recognition. Future Generation Computer Systems. 2019; 93: 224-236.

Banos O, Galvez JM, Damas M, Pomares H, Rojas I. Window size impact in human activity recognition. Sensors (Basel). 2014 Apr 9;14(4):6474-99. doi: 10.3390/s140406474. PMID: 24721766; PMCID: PMC4029702.

Noor MHM, Salcic Z, Wang KIK. Adaptive sliding window segmentation for physical activity recognition using a single tri-axial accelerometer. Pervasive Mob Comput. 2017; 38: 41-59.

Wang J, Chen Y, Hao S, Peng X, Hu L. Deep learning for sensor-based activity recognition: A survey. Pattern Recognit Lett. 2019; 119: 3-11.

Nweke HF, Teh YW, Al-garadi MA, Alo UR. Deep learning algorithms for human activity recognition using mobile and wearable sensor networks: State of the art and research challenges. Expert Syst Appl. 2018; 105: 233-261.

Shoaib M, Bosch S, Incel OD, Scholten H, Havinga PJ. Complex Human Activity Recognition Using Smartphone and Wrist-Worn Motion Sensors. Sensors (Basel). 2016 Mar 24;16(4):426. doi: 10.3390/s16040426. PMID: 27023543; PMCID: PMC4850940.

Figo D, Diniz PC, Ferreira DR, Cardoso JMP. Preprocessing techniques for context recognition from accelerometer data. Pers Ubiquitous Comput. 2010; 14(7): 645-662.

Wang Y, Cang S, Yu H. A survey on wearable sensor modality centred human activity recognition in health care. Expert Syst Appl. 2019; 137: 167-190.

Altun K, Barshan B, Tunçel O. Comparative study on classifying human activities with miniature inertial and magnetic sensors. Pattern Recognit. 2010; 43(10): 3605-3620.

Zhang M, Sawchuk AA. Human daily activity recognition with sparse representation using wearable sensors. IEEE J Biomed Health Inform. 2013 May;17(3):553-60. doi: 10.1109/jbhi.2013.2253613. PMID: 24592458.

Bao L, Intille SS. Activity recognition from user-annotated acceleration data. Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). 2004; 3001:1-17.

Altun K, Barshan B. Human activity recognition using inertial/magnetic sensor units. Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). 2010; 6219: 38-51.

Khan AM, Lee YK, Lee SY, Kim TS. A triaxial accelerometer-based physical-activity recognition via augmented-signal features and a hierarchical recognizer. IEEE Trans Inf Technol Biomed. 2010 Sep;14(5):1166-72. doi: 10.1109/TITB.2010.2051955. Epub 2010 Jun 7. PMID: 20529753.

Aljarrah AA, Ali AH. Human Activity Recognition using PCA and BiLSTM Recurrent Neural Networks. 2nd International Conference on Engineering Technology and its Applications, IICETA. 2019; 156-160.

Chen Z, Zhu Q, Soh YC, Zhang L. Robust Human Activity Recognition Using Smartphone Sensors via CT-PCA and Online SVM. IEEE Trans Industr Inform. 2017; 13(6): 3070-3080.

Sekhon NK, Singh G. Hybrid Technique for Human Activities and Actions Recognition Using PCA, Voting, and K-means. Lecture Notes in Networks and Systems. 2023; 492:351-363.

Yuan G, Zang C, Zeng P. Human Activity Recognition Using LDA And Stochastic Configuration Networks. 2nd International Conference on Computer Science, Electronic Information Engineering and Intelligent Control Technology, CEI. 2022; 415-420.

Bhuiyan RA, Amiruzzaman M, Ahmed N, Islam MDR. Efficient frequency domain feature extraction model using EPS and LDA for human activity recognition. Proceedings of the 3rd IEEE International Conference on Knowledge Innovation and Invention 2020, ICKII. 2020; 344-347.

Hamed M, Abidine B, Fergani B, Oualkadi AEl. News Schemes for Activity Recognition Systems Using PCA-WSVM, ICA-WSVM, and LDA-WSVM. 2015; 6: 505-521.

Uddin MZ, Lee JJ, Kim TS. Shape-based human activity recognition using independent component analysis and hidden markov model. Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). 2008; 5027: 245-254.

Altun K, Barshan B, Tunçel O. Comparative study on classifying human activities with miniature inertial and magnetic sensors. Pattern Recognit. 2010; 43(10): 3605-3620.

Mäntyjärvi J, Himberg J, Seppänen T. Recognizing human motion with multiple acceleration sensors. Proceedings of the IEEE International Conference on Systems, Man and Cybernetics. 2001; 2: 747-752.

Zeng M. Convolutional Neural Networks for human activity recognition using mobile sensors. Proceedings of the 2014 6th International Conference on Mobile Computing, Applications and Services. MobiCASE. 2014; 197-205.

Wang J, Chen Y, Hao S, Peng X, Hu L. Deep learning for sensor-based activity recognition: A survey. Pattern Recognit Lett. 2019; 119: 3-11.

Chen Y, Xue Y. A Deep Learning Approach to Human Activity Recognition Based on Single Accelerometer. Proceedings - 2015 IEEE International Conference on Systems, Man, and Cybernetics SMC 2015. 2016; 1488-1492.

Kautz T, Groh BH, Hannink J, Jensen U, Strubberg H, Eskofier BM. Activity recognition in beach volleyball using a Deep Convolutional Neural Network: Leveraging the potential of Deep Learning in sports. Data Min Knowl Discov. 2017; 31(6): 1678-1705.

Ronao CA, Cho SB. Human activity recognition with smartphone sensors using deep learning neural networks. Expert Syst Appl. 2016; 59: 235-244.

Bengio Y. Learning deep architectures for AI. Foundations and Trends in Machine Learning. 2009; 2(1): 1-127.

Ordóñez FJ, Roggen D. Deep Convolutional and LSTM Recurrent Neural Networks for Multimodal Wearable Activity Recognition. Sensors (Basel). 2016 Jan 18;16(1):115. doi: 10.3390/s16010115. PMID: 26797612; PMCID: PMC4732148.

Babu GS, Zhao P, Li XL. Deep convolutional neural network based regression approach for estimation of remaining useful life. Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). 2016; 9642: 214-228.

Ha S, Yun JM, Choi S. Multi-modal Convolutional Neural Networks for Activity Recognition. Proceedings - 2015 IEEE International Conference on Systems, Man, and Cybernetics, SMC 2015. 2016; 3017-3022.

Ha S, Choi S. Convolutional neural networks for human activity recognition using multiple accelerometer and gyroscope sensors. Proceedings of the International Joint Conference on Neural Networks. 2016; 381-388.

Ravi D, Wong C, Lo B, Yang GZ. A Deep Learning Approach to on-Node Sensor Data Analytics for Mobile or Wearable Devices. IEEE J Biomed Health Inform. 2017 Jan;21(1):56-64. doi: 10.1109/JBHI.2016.2633287. Epub 2016 Dec 23. PMID: 28026792.

Jiang W, Yin Z. Human activity recognition using wearable sensors by deep convolutional neural networks. MM 2015 - Proceedings of the 2015 ACM Multimedia Conference. 2015; 1307-1310.

Chen K, Yao L, Gu T, Yu Z, Wang X, Zhang D. Fullie and Wiselie: A Dual-Stream Recurrent Convolutional Attention Model for Activity Recognition. 2017. https://arxiv.org/abs/1711.07661v1

Hachiya H, Sugiyama M, Ueda N. Importance-weighted least-squares probabilistic classifier for covariate shift adaptation with application to human activity recognition. Neurocomputing. 2012; 80: 93-101.

Sani S, Wiratunga N, Massie S, Cooper K. kNN Sampling for Personalised Human Activity Recognition. International Conference on Case-Based Reasoning. 2017; 10339: 330-344.

Fan L, Wang Z, Wang H. Human activity recognition model based on decision tree. Proceedings - 2013 International Conference on Advanced Cloud and Big Data, CBD. 2013; 64-68.

Maswadi K, Ghani NA, Hamid S, Rasheed MB. Human activity classification using Decision Tree and Naïve Bayes classifiers. Multimed Tools Appl. 2021; 80(14): 21709-21726.

Fan S, Jia Y, Jia C. A Feature Selection and Classification Method for Activity Recognition Based on an Inertial Sensing Unit. 2019; 10: 290.

Jiménez AR, Seco F. Multi-Event Naive Bayes Classifier for Activity Recognition in the UCAmI Cup. 2018; 2: 1264.

Kim H, Ahn CR, Engelhaupt D, Lee SH. Application of dynamic time warping to the recognition of mixed equipment activities in cycle time measurement. Autom Constr. 2018; 87: 225-234.

Zhang H, Dong Y, Li J, Xu D. Dynamic Time Warping under Product Quantization, with Applications to Time-Series Data Similarity Search. IEEE Internet Things J. 2022; 9(14): 11814-11826.

Chathuramali KGM, Rodrigo R. Faster human activity recognition with SVM. International Conference on Advances in ICT for Emerging Regions. ICTer 2012 - Conference Proceedings. 2012; 197-203.

Qian H, Mao Y, Xiang W, Wang Z. Recognition of human activities using SVM multi-class classifier. Pattern Recognit Lett. 2010; 31(2): 100-111.

Noor S, Uddin V. Using ANN for Multi-View Activity Recognition in Indoor Environment. Proceedings - 14th International Conference on Frontiers of Information Technology, FIT 2016. 2017; 258-263.

Bangaru SS, Wang C, Busam SA, Aghazadeh F. ANN-based automated scaffold builder activity recognition through wearable EMG and IMU sensors. Autom Constr. 2021; 126: 103653.

Zhang L, Wu X, Luo D. Human activity recognition with HMM-DNN model. Proceedings of 2015 IEEE 14th International Conference on Cognitive Informatics and Cognitive Computing, ICCI*CC. 2015; 192-197.

Cheng X, Huang B. CSI-Based Human Continuous Activity Recognition Using GMM-HMM. IEEE Sens J. 2022; 22(19): 18709-18717.

Xue T, Liu H. Hidden Markov Model and Its Application in Human Activity Recognition and Fall Detection: A Review. Lecture Notes in Electrical Engineering. 2022; 878: 863-869.

Cheng X, Huang B, Zong J. Device-Free Human Activity Recognition Based on GMM-HMM Using Channel State Information. IEEE Access. 2021; 9: 76592-76601.

Qin W, Wu HN. Switching GMM-HMM for Complex Human Activity Modeling and Recognition. Proceedings - 2022 Chinese Automation Congress. CAC. 2022; 696-701.

Wang J, Chen Y, Hao S, Peng X, Hu L. Deep learning for sensor-based activity recognition: A survey. Pattern Recognit Lett. 2019; 119: 3-11.

Li X, Zhao P, Wu M, Chen Z, Zhang L. Deep learning for human activity recognition. Neurocomputing. 2021; 444: 214-216.

Kumar P, Chauhan S, Awasthi LK. Human Activity Recognition (HAR) Using Deep Learning: Review, Methodologies, Progress and Future Research Directions. Archives of Computational Methods in Engineering. 2023; 1: 1-41.

Saini S, Juneja A, Shrivastava A. Human Activity Recognition using Deep Learning: Past, Present and Future. 2023 1st International Conference on Intelligent Computing and Research Trends. ICRT. 2023.